Introduction

As Artificial Intelligence (AI) continues to evolve, its integration into everyday life is raising critical ethical concerns. Among the most pressing issues in AI ethics are bias and fairness in AI systems. From hiring decisions to loan approvals and healthcare diagnostics, AI-driven algorithms significantly impact people’s lives. However, these systems are not always impartial: they often reflect biases present in the data they are trained on.

Understanding the ethical implications of AI and ensuring fairness in AI systems is crucial to building trust and avoiding discrimination. In this article, we will explore the challenges of bias and fairness in AI systems, their real-world consequences, and the steps we can take to create ethical AI.

Understanding AI Bias: How Does It Happen?

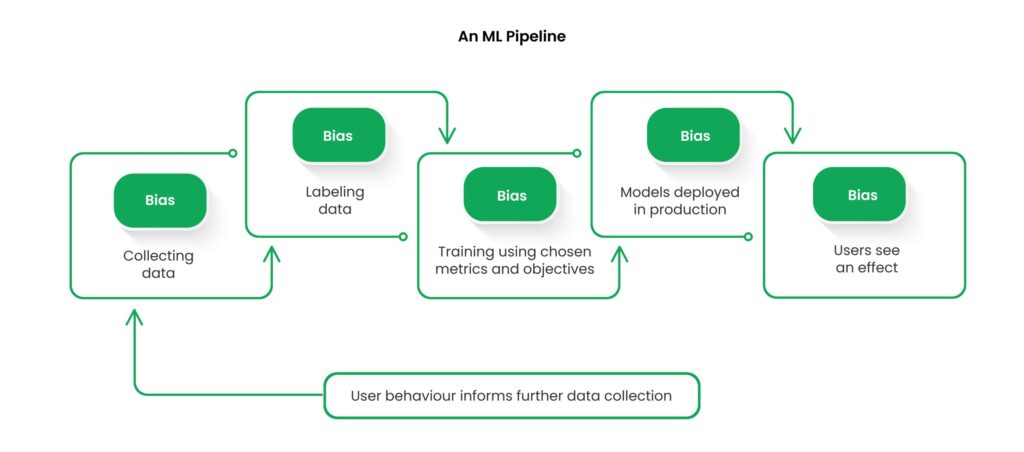

AI bias occurs when machine learning models produce prejudiced or unfair outcomes, often due to biased training data, flawed algorithms, or human error. Bias in AI can manifest in multiple ways, including racial, gender, socioeconomic, and political biases.

Types of AI Bias

- Data Bias: When training data is incomplete, unrepresentative, or skewed towards certain demographics.

- Algorithmic Bias: When the design of an AI algorithm systematically disadvantages certain groups.

- User Bias: When biased human decisions influence the way AI models are trained or deployed.

- Interpretation Bias: When results are misinterpreted, leading to biased conclusions.

Examples of AI Bias in the Real World

- Facial Recognition Bias: AI-powered facial recognition systems have been found to misidentify individuals with darker skin tones at higher rates.

- Hiring Algorithms: Some AI recruitment tools have shown gender bias, favoring male candidates over female candidates.

- Loan Approval Systems: Biased data in credit scoring models has led to discrimination against minority groups.

- Healthcare Diagnosis: AI-driven medical diagnosis systems may underperform for certain ethnic groups due to a lack of diverse data.
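
Disparities like the ones above are usually surfaced by breaking a model's error rate down by group instead of reporting a single overall number. The snippet below is a minimal sketch of that idea in plain Python. The function name `error_rate_by_group` and the toy labels, predictions, and group tags are made up for illustration and do not come from any real system.

```python
from collections import defaultdict


def error_rate_by_group(y_true, y_pred, groups):
    """Return {group: fraction of misclassified examples} for each group."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        totals[group] += 1
        if truth != pred:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


# Hypothetical toy data: true labels, model predictions, and a group tag per example.
y_true = [1, 0, 1, 1, 0, 1, 0, 1]
y_pred = [1, 0, 1, 1, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = error_rate_by_group(y_true, y_pred, groups)
for group, rate in sorted(rates.items()):
    print(f"group {group}: error rate {rate:.2f}")
```

In this toy data the model is correct for every example in group A and wrong for three of the four examples in group B, a gap that an overall accuracy figure would hide.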

The Impact of Bias in AI Systems

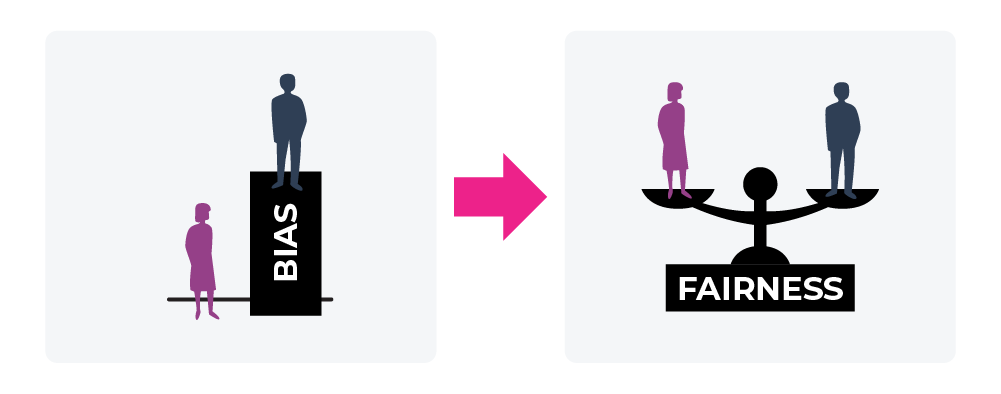

AI bias is not just a technical issue—it has real-world consequences that can lead to discrimination, reinforce inequalities, and damage public trust in AI technologies.

Key Consequences of AI Bias:

- Social Inequality: AI-driven decisions can widen existing inequalities by favoring privileged groups.

- Legal & Ethical Risks: Organizations deploying biased AI systems may face lawsuits, reputational damage, and regulatory penalties.

- Loss of Trust: If users perceive AI systems as unfair, they may reject or distrust AI-based decision-making.

- Economic Consequences: Biased AI systems can lead to financial losses for businesses by making poor predictions or excluding valuable talent.

Ensuring Fairness in AI Systems

Achieving fairness in AI systems requires proactive efforts in data collection, model development, and ethical AI governance. The following strategies can help mitigate bias and promote fairness.

1. Improving Data Quality

- Collect diverse and representative datasets that include different demographic groups.

- Reduce historical biases by auditing training data for skewed patterns.

- Ensure data transparency so that AI developers can trace potential biases.
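
One simple way to start auditing a dataset for skew is to compare each group's share of the training data with its share of the population the system is meant to serve. The sketch below assumes you have a group label per record and a set of reference shares. The helper `representation_gaps` and all of the numbers are hypothetical.

```python
from collections import Counter


def representation_gaps(sample_groups, reference_shares):
    """Return {group: dataset share minus reference share}.

    Positive values mean the group is over-represented in the dataset,
    negative values mean it is under-represented.
    """
    counts = Counter(sample_groups)
    total = len(sample_groups)
    return {
        group: counts.get(group, 0) / total - share
        for group, share in reference_shares.items()
    }


# Hypothetical dataset: 8 records from group A, 2 from group B.
sample_groups = ["A"] * 8 + ["B"] * 2
# Hypothetical reference population: both groups equally common.
reference_shares = {"A": 0.5, "B": 0.5}

gaps = representation_gaps(sample_groups, reference_shares)
for group, gap in sorted(gaps.items()):
    print(f"group {group}: {gap:+.2f}")
```

A large negative gap is a prompt to collect more data for that group or to look more closely at how the data was sampled. It is not proof of a biased model on its own.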

2. Algorithmic Transparency & Explainability

- Use explainable AI (XAI) techniques to understand how AI models make decisions.

- Ensure interdisciplinary AI development, incorporating experts in ethics, law, and social sciences.

- Implement bias detection tools to identify and correct discriminatory patterns.
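
As a rough illustration of what a bias detection check can look like, the sketch below computes the disparate impact ratio: the lowest group selection rate divided by the highest. A ratio below 0.8 is often treated as a signal for closer review, a convention known as the four-fifths rule from US employment guidelines. The function names and the toy decisions are assumptions made for this example, not part of any specific toolkit.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Return {group: fraction of positive (1) decisions} for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(decisions, groups):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    rates = selection_rates(decisions, groups)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so no group is favored
    return min(rates.values()) / highest


# Hypothetical decisions (1 = approved, 0 = rejected) for two groups.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

ratio = disparate_impact_ratio(decisions, groups)
print(f"disparate impact ratio: {ratio:.2f}")
if ratio < 0.8:
    print("below the four-fifths threshold, review recommended")
```

Here group A is approved 75% of the time and group B 25% of the time, so the ratio is about 0.33 and the check flags the system for review.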

3. Fairness-Aware Machine Learning Models

- Apply fairness constraints when designing AI models to balance decision-making.

- Use adversarial debiasing techniques that detect and minimize bias.

- Conduct regular audits of AI models to evaluate fairness across different groups.
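
Adversarial debiasing needs a full training setup, so the sketch below shows a simpler pre-processing technique instead: reweighing, as described by Kamiran and Calders. Each training example gets the weight P(group) × P(label) / P(group, label), which makes group and label statistically independent in the weighted data. The function name `reweighing_weights` and the toy data are hypothetical.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Return one weight per example: P(group) * P(label) / P(group, label)."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


# Hypothetical training set: group A mostly has positive labels, group B mostly negative.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]

weights = reweighing_weights(groups, labels)
for g, y, w in zip(groups, labels, weights):
    print(f"group={g} label={y} weight={w:.2f}")
```

The rare combinations (a negative label in group A, a positive label in group B) receive a weight of 2.0, and the common ones about 0.67. With these weights, positive labels carry half of the total weight within each group. The weights can then be passed to any training routine that accepts per-sample weights.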

4. Ethical AI Regulations & Policies

- Follow established regulations and frameworks such as the EU AI Act, the OECD AI Principles, and the IEEE's AI ethics guidelines (Ethically Aligned Design).

- Implement corporate AI governance to ensure compliance with fairness standards.

- Encourage human oversight in AI-based decision-making to correct biased outputs.
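
Human oversight can be built into a decision pipeline directly. One common pattern, sketched below under assumed values, is to send borderline model scores to a human reviewer instead of deciding automatically. The function `route_decision`, the 0.5 threshold, and the 0.1 review margin are illustrative choices, not recommended settings.

```python
def route_decision(score, threshold=0.5, review_margin=0.1):
    """Decide automatically only when the score is clearly on one side.

    Scores within `review_margin` of `threshold` go to a human reviewer.
    Both default values are hypothetical and would need tuning per use case.
    """
    if abs(score - threshold) < review_margin:
        return "human_review"
    return "approve" if score >= threshold else "reject"


# Hypothetical model scores for three applicants.
for score in (0.9, 0.55, 0.1):
    print(score, "->", route_decision(score))
```

Reviewing only the uncertain cases keeps the workload manageable, but it does not catch confident errors, so it works best alongside regular audits across groups.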

Case Studies: How Companies Are Addressing AI Bias

1. Google’s AI Fairness Initiative

Google has launched AI ethics research programs focusing on algorithmic fairness, transparency, and inclusivity. Its researchers have developed tools such as TCAV (Testing with Concept Activation Vectors) to interpret AI model behavior, which can help surface and reduce bias.

2. IBM’s AI Ethics Guidelines

IBM has created AI Fairness 360, an open-source toolkit to help developers detect and mitigate bias in machine learning models. They also advocate for responsible AI practices through partnerships with policymakers.

3. Microsoft’s Responsible AI Practices

Microsoft has implemented AI ethics review boards and fairness-aware ML models to prevent discriminatory outcomes in its AI-driven services, such as facial recognition and language processing.

The Future of AI Ethics: Moving Towards Responsible AI

As AI continues to evolve, ethical concerns surrounding its deployment and decision-making processes become increasingly critical. Ensuring responsible AI development requires addressing issues such as bias, transparency, privacy, and accountability. The future of AI ethics will be shaped by technological advancements, regulations, and collaborative efforts from policymakers, businesses, and researchers.

Key Ethical Challenges in AI

- Bias and Fairness: AI models can inherit biases from training data, leading to unfair and discriminatory outcomes. Future AI systems must implement bias detection and mitigation strategies to ensure equitable decision-making.

- Transparency and Explainability: Many AI models, particularly deep learning systems, function as “black boxes,” making it difficult to understand their decision-making processes. Advancements in explainable AI (XAI) will be crucial for building trust and accountability.

- Privacy and Data Protection: AI relies on vast amounts of data, raising concerns about user privacy and security. Stricter regulations and ethical AI practices will be necessary to protect sensitive information while enabling innovation.

- Accountability and Governance: As AI systems become more autonomous, determining responsibility for errors and unintended consequences becomes a challenge. Clear legal frameworks and ethical guidelines must be established to ensure accountability.

- AI and Employment: The rise of AI-driven automation raises concerns about job displacement. Ethical AI development should focus on augmenting human capabilities rather than replacing them, ensuring a fair transition for workers.

Moving Towards Responsible AI

- Developing Ethical AI Guidelines: Organizations and governments must collaborate to create standardized ethical AI frameworks that promote fairness, transparency, and inclusivity.

- Implementing AI Audits: Regular audits of AI systems can help identify biases, security risks, and ethical concerns, ensuring compliance with established ethical principles.

- Fostering Public Awareness and Education: Increasing AI literacy among the general public and stakeholders will empower individuals to understand AI’s impact and advocate for ethical practices.

- Enhancing Human-AI Collaboration: Future AI systems should be designed to work alongside humans, complementing human intelligence rather than replacing it.

- Strengthening Regulations and Policies: Governments must establish and enforce regulations to ensure ethical AI deployment, balancing innovation with societal well-being.

The future of AI ethics hinges on proactive efforts to create responsible AI systems that prioritize fairness, accountability, and transparency. By addressing ethical challenges and fostering a collaborative approach, society can harness AI’s potential while minimizing risks, ensuring that AI remains a force for good in the years to come.

Final Thoughts

The ethical challenges of bias and fairness in AI systems must be addressed to build trustworthy, transparent, and inclusive AI. By improving data quality, increasing algorithmic transparency, developing fairness-aware models, and enforcing ethical regulations, we can create AI that serves all individuals fairly.

AI ethics is not just a technical issue—it is a social, legal, and moral responsibility. As AI continues to shape our world, ensuring fair and unbiased AI systems will be crucial for a more equitable future.

For more insights on AI Ethics and AI-powered technologies, stay connected with TechToGeek! 🚀