🧠 OrionRAG – The Future of Agentic RAG Chatbots, Built with CrewAI and LlamaIndex

🚀 Introduction: What Is OrionRAG?

In today’s AI-driven era, chatbots are evolving beyond static question–answer systems. The next big leap is Agentic RAG Chatbots, where retrieval and reasoning meet autonomy and intelligence.

OrionRAG is one such innovation — an Agentic RAG Chatbot that blends the power of CrewAI, LlamaIndex, and Streamlit into a seamless, reasoning-capable conversational system. It doesn’t just respond to questions; it thinks, retrieves, and acts like an intelligent digital agent.

Designed as a containerized AI tool, OrionRAG is deployable anywhere using Docker, making it ideal for developers, AI researchers, and enthusiasts exploring the future of autonomous retrieval-augmented systems.


⚙️ Tech Stack at a Glance

| Component | Function |
|---|---|
| Streamlit | Provides an interactive chat interface |
| LlamaIndex | Handles document indexing and retrieval |
| CrewAI | Manages multiple intelligent agents |
| Python 3.10+ | Core programming environment |
| Docker | Ensures portable deployment |
| OpenAI or Local LLMs | Acts as the generative engine |

Each component complements the others, creating a cohesive ecosystem for building a high-performance Agentic RAG Chatbot.

🌟 Why Choose an Agentic RAG Chatbot?

Traditional RAG systems fetch information from a vector store in a single step and pass it straight to the model. Agentic RAG Chatbots like OrionRAG add reasoning and autonomy on top, which enables the chatbot to:

- Understand context deeply

- Break down complex queries

- Retrieve and synthesize relevant data from multiple sources

- Form coherent, context-aware responses

This multi-step reasoning process is what makes OrionRAG stand out in the modern AI ecosystem.
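The difference is easiest to see on a compound question. The sketch below is a toy, framework-free illustration in plain Python, not OrionRAG code: `retrieve`, `decompose`, and `agentic_answer` are hypothetical names, keyword-overlap scoring stands in for vector search, and a naive split on " and " stands in for an LLM planner. Each retrieval call returns only its single best match, so a one-shot lookup covers just one half of the question, while decomposing first covers both.

```python
import re

# Toy knowledge base (illustrative data only): document id -> text.
DOCS = {
    "streamlit": "Streamlit renders the chat interface in the browser.",
    "llamaindex": "LlamaIndex indexes documents and retrieves relevant chunks.",
    "crewai": "CrewAI coordinates agents that plan and reason over context.",
}

def tokens(text: str) -> set[str]:
    """Lowercase word set, used for a crude keyword-overlap score."""
    return set(re.findall(r"[a-z]+", text.lower()))

def retrieve(query: str, k: int = 1) -> list[str]:
    """Return the ids of the k documents sharing the most words with the query."""
    q = tokens(query)
    ranked = sorted(DOCS, key=lambda d: len(q & tokens(DOCS[d])), reverse=True)
    return ranked[:k]

def decompose(query: str) -> list[str]:
    """Naively split a compound question into sub-questions on ' and '."""
    return [part.strip(" ?") for part in query.split(" and ") if part.strip(" ?")]

def agentic_answer(query: str) -> list[str]:
    """Retrieve separately for each sub-question, then merge without duplicates."""
    merged: list[str] = []
    for sub in decompose(query):
        for doc_id in retrieve(sub):
            if doc_id not in merged:
                merged.append(doc_id)
    return merged

question = "How does Streamlit render the interface and how does LlamaIndex retrieve chunks?"
print("one-shot:", retrieve(question))
print("agentic: ", agentic_answer(question))
```

With an LLM doing the decomposition, the same loop also handles questions whose parts are not joined by a literal "and".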

🧠 Features of OrionRAG

- Agentic Intelligence: Uses CrewAI agents to reason, plan, and execute tasks intelligently.

- Efficient Retrieval: Leverages LlamaIndex for fast, accurate context fetching.

- Minimal Frontend: Built with Streamlit, offering a clean, dark-themed chat UI.

- Dockerized Deployment: Fully containerized for easy local or cloud hosting.

- Modular Design: Can be extended with new agents or data sources.

- Real-Time Querying: Instant response capability with smooth UI interaction.

- Multi-Agent Coordination: Each agent performs distinct reasoning and retrieval roles.

Together, these features make OrionRAG a powerful Agentic RAG Chatbot framework.

🧩 Installation Guide

Here’s how to set up OrionRAG in minutes.

Step 1: Clone the Repository

```bash
git clone https://github.com/subasen85/OrionRAG.git
cd OrionRAG
```

Step 2: Install Dependencies

```bash
pip install -r requirements.txt
```

Make sure your `.env` file contains your OpenAI API key:

```
OPENAI_API_KEY=your_api_key_here
```
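To confirm the key is in place before launching, here is a stdlib-only sketch. `load_env_file` and `require_key` are hypothetical helpers, the parser only understands simple `KEY=VALUE` lines, and OrionRAG itself may load `.env` differently (for example with a library such as `python-dotenv`).

```python
import os
from pathlib import Path

def load_env_file(path: str = ".env") -> dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    values: dict[str, str] = {}
    for raw in Path(path).read_text(encoding="utf-8").splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop surrounding whitespace and optional quotes around the value.
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values

def require_key(values: dict[str, str], name: str = "OPENAI_API_KEY") -> str:
    """Prefer the real environment, fall back to the file; fail if missing or still the placeholder."""
    key = os.environ.get(name) or values.get(name, "")
    if not key or key == "your_api_key_here":
        raise RuntimeError(f"{name} is missing or still set to the placeholder")
    return key
```

Running `require_key(load_env_file())` from the project folder raises a clear error instead of letting the first chat request fail.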

Step 3: Run the Chatbot Locally

```bash
streamlit run app.py
```

Access the Agentic RAG Chatbot interface at:

👉 http://localhost:8501

Step 4: Deploy with Docker (Recommended)

For smooth, cross-platform deployment:

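```bash
docker build -t orionrag .
docker run --env-file .env -p 8501:8501 orionrag
```

The `--env-file .env` flag passes your API key into the container as an environment variable.

Now open http://localhost:8501 in your browser, and your OrionRAG is live! (Inside the container Streamlit listens on `0.0.0.0`; that is a bind address, not a URL to visit.)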

🧩 How OrionRAG Works

OrionRAG follows a modular, three-layered architecture designed for clarity, flexibility, and scalability.

🧱 1. Streamlit Frontend

The frontend serves as the user’s entry point. Its design mimics modern chat interfaces — dark background, centered input box, and quick-access buttons like “Explain about Ecosystem.”

Code Snippet (UI Setup):

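```python
import streamlit as st

def chat_with_agent(query: str) -> str:
    # Hypothetical stub so the snippet runs on its own; in the app this would hand the query to the CrewAI backend.
    return f"(agent response to: {query})"

st.title("🧠 OrionRAG - Agentic RAG Chatbot")

# Free-text question box; the quick-access button supplies a preset question instead.
user_input = st.text_input("Ask Chatbot...")
if st.button("Explain about Ecosystem"):
    user_input = "Explain about Ecosystem"

if user_input:
    response = chat_with_agent(user_input)
    st.write(response)
```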

This minimalist design makes the Agentic RAG Chatbot experience both intuitive and responsive.

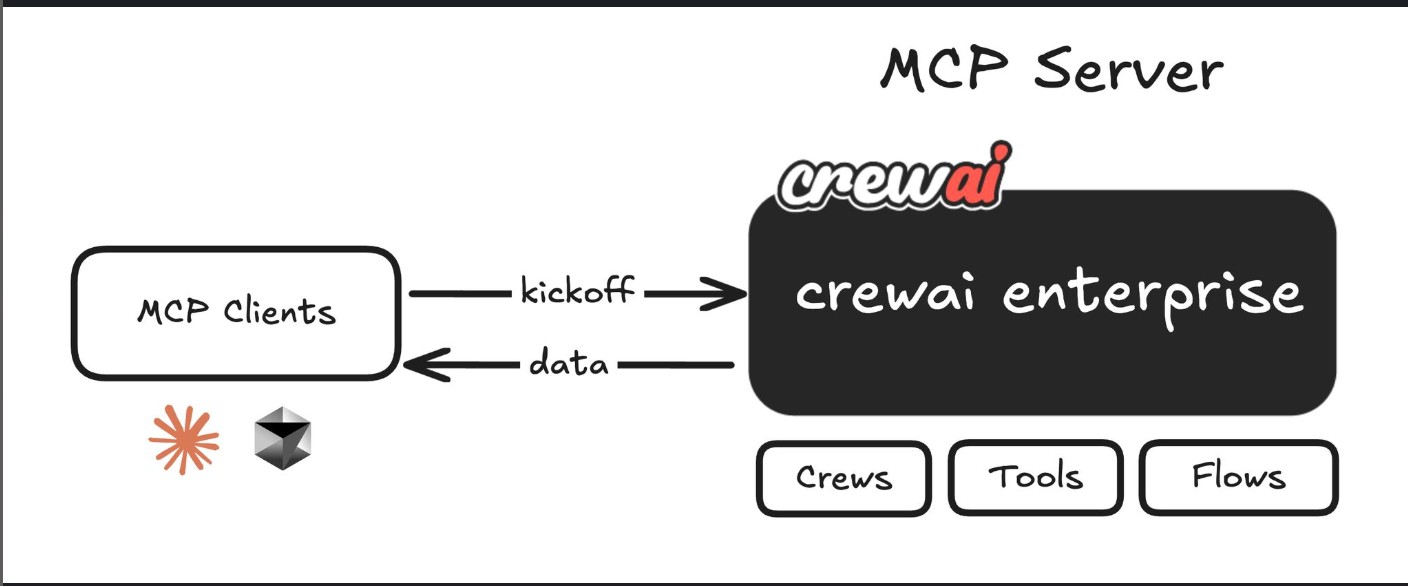

🧩 2. CrewAI Multi-Agent Backend

CrewAI powers OrionRAG’s “agentic” layer — a set of autonomous agents collaborating to interpret queries, plan responses, and synthesize answers.

Example (CrewAI Setup):

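```python
from crewai import Agent, Crew, Task

# Agents need a role, a goal, and a backstory (backstories here are illustrative; no retrieval tool is attached).
retriever_agent = Agent(role="Retriever", goal="Fetch relevant documents", backstory="Knows where the project's reference material lives.")
reasoning_agent = Agent(role="Reasoner", goal="Synthesize context and derive insight", backstory="Turns retrieved material into clear explanations.")

# Work is expressed as Tasks; {query} is filled in from kickoff(inputs=...).
retrieve_task = Task(description="Collect material relevant to: {query}", expected_output="Key passages and facts", agent=retriever_agent)
answer_task = Task(description="Answer the question: {query}", expected_output="A concise, grounded answer", agent=reasoning_agent)

crew = Crew(agents=[retriever_agent, reasoning_agent], tasks=[retrieve_task, answer_task])
result = crew.kickoff(inputs={"query": "Explain AI ecosystems"})
print(result)
```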

Each agent has a defined role, and the crew coordinates their tasks (in CrewAI's default sequential process, each task's output is passed as context to the next), which is the essence of an Agentic RAG Chatbot.
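In CrewAI's sequential process, a task's output becomes context for the task after it. The plain-Python sketch below imitates that hand-off without CrewAI, purely to show the data flow; `StubAgent`, `run_sequential`, and the lambda behaviours are hypothetical stand-ins for LLM-backed agents.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class StubAgent:
    """Hypothetical stand-in for an LLM-backed agent: a role plus a function."""
    role: str
    act: Callable[[str, str], str]  # (task, context) -> output

def run_sequential(agents: list[StubAgent], task: str) -> list[tuple[str, str]]:
    """Run agents in order, feeding each one's output to the next as context."""
    context = ""
    trace: list[tuple[str, str]] = []
    for agent in agents:
        context = agent.act(task, context)
        trace.append((agent.role, context))
    return trace

retriever = StubAgent("Retriever", lambda task, ctx: f"notes on {task}")
reasoner = StubAgent("Reasoner", lambda task, ctx: f"answer built from [{ctx}]")

for role, output in run_sequential([retriever, reasoner], "AI ecosystems"):
    print(f"{role}: {output}")
```

The reasoner never sees the raw question alone; it works from whatever the retriever produced, which is why the order of tasks matters.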

📚 3. LlamaIndex Retrieval Engine

LlamaIndex ensures fast, context-aware information retrieval from local or external datasets.

Snippet (Retrieval Code):

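```python
# Import path used by LlamaIndex 0.10 and later.
from llama_index.core import SimpleDirectoryReader, VectorStoreIndex

# Load every supported file in data/ and embed it into an in-memory vector index.
docs = SimpleDirectoryReader("data/").load_data()
index = VectorStoreIndex.from_documents(docs)
retriever = index.as_retriever(similarity_top_k=3)

def retrieve_context(query: str) -> str:
    # Return the text of the best-matching chunks for the agents to reason over.
    nodes = retriever.retrieve(query)
    return "\n\n".join(n.node.get_content() for n in nodes)
```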

Once relevant data is retrieved, it’s handed to CrewAI’s agents for reasoning and natural language generation.
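Conceptually, a vector index embeds each chunk, embeds the query the same way, and returns the chunks with the highest similarity. The sketch below shows only that ranking step, using a toy bag-of-words vector and cosine similarity; LlamaIndex uses a learned embedding model instead (an OpenAI embedding model by default), so `embed` and `top_k` are illustrative, hypothetical helpers.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Toy 'embedding': word counts. Real systems use a learned embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors (0.0 if either is empty)."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def top_k(query: str, chunks: list[str], k: int = 2) -> list[tuple[float, str]]:
    """Score every chunk against the query and keep the k best."""
    q = embed(query)
    scored = [(cosine(q, embed(c)), c) for c in chunks]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)[:k]

chunks = [
    "Docker packages the app with its dependencies.",
    "An AI ecosystem links models, tools, and data pipelines.",
    "Streamlit serves the chat UI on port 8501.",
]
for score, chunk in top_k("what is an AI ecosystem", chunks, k=1):
    print(f"{score:.2f}  {chunk}")
```

Swapping `embed` for a real embedding model changes the quality of the scores but not the shape of the algorithm.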

🔄 The Working Pipeline

Here’s how OrionRAG processes a query:

- User Input → via Streamlit

- CrewAI Orchestration → Agents reason and plan

- LlamaIndex Retrieval → Fetches relevant content

- Synthesis → Agents merge and refine the response

- Output → Streamlit displays the answer

This flow grounds each response in retrieved content instead of the model's built-in knowledge alone, which is the hallmark of an effective Agentic RAG Chatbot.
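The five steps map onto a single function that takes each stage as a pluggable component. In this plain-Python sketch, `Turn`, `handle_query`, and the lambda stubs are hypothetical: in OrionRAG the planning and synthesis would come from CrewAI agents, retrieval from LlamaIndex, and Streamlit would handle steps 1 and 5.

```python
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class Turn:
    """State carried through the pipeline for a single user query."""
    query: str
    plan: list[str] = field(default_factory=list)
    context: list[str] = field(default_factory=list)
    answer: str = ""

def handle_query(
    query: str,
    plan: Callable[[str], list[str]],
    retrieve: Callable[[str], list[str]],
    synthesize: Callable[[str, list[str]], str],
) -> Turn:
    """Run steps 2-4; the UI supplies `query` (step 1) and displays the answer (step 5)."""
    turn = Turn(query)
    turn.plan = plan(query)                         # step 2: orchestration decides what to look up
    for item in turn.plan:
        turn.context.extend(retrieve(item))         # step 3: retrieval for each planned item
    turn.answer = synthesize(query, turn.context)   # step 4: synthesis over the retrieved context
    return turn

# Hypothetical stubs in place of CrewAI agents and a LlamaIndex retriever.
turn = handle_query(
    "Explain about Ecosystem",
    plan=lambda q: ["ecosystem definition"],
    retrieve=lambda item: [f"passage about {item}"],
    synthesize=lambda q, ctx: f"{q}: based on {len(ctx)} passage(s)",
)
print(turn.answer)
```

Because every stage is passed in, a different retriever or a local LLM can be swapped in without touching the pipeline itself.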

💬 Sample Interaction

User: “Explain about Ecosystem”

OrionRAG:

“An AI ecosystem involves models, tools, and data pipelines interacting to create a self-improving learning system capable of reasoning, retrieval, and autonomous task completion.”

Such adaptive reasoning reflects the true power of Agentic RAG Chatbots.

🐳 Deploying with Docker

Docker simplifies OrionRAG deployment across any platform.

Example Dockerfile:

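```dockerfile
FROM python:3.10

WORKDIR /app

# Install dependencies first so this layer stays cached when only app code changes.
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt

# Copy the application code.
COPY . .

EXPOSE 8501

# Bind to 0.0.0.0 so Streamlit is reachable from outside the container.
CMD ["streamlit", "run", "app.py", "--server.port=8501", "--server.address=0.0.0.0"]
```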

Run it anywhere: your Agentic RAG Chatbot behaves the same on a local machine, in the cloud, or on a Kubernetes cluster.
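On Kubernetes or in a deploy script it helps to know when the container is actually serving. Below is a minimal stdlib sketch of a readiness poll. It assumes a recent Streamlit release, which answers `GET /_stcore/health` with HTTP 200 (older releases used `/healthz`); `wait_until_ready` is a hypothetical helper, not part of OrionRAG.

```python
import time
import urllib.request

def wait_until_ready(base_url: str = "http://localhost:8501", timeout: float = 30.0, interval: float = 0.5) -> bool:
    """Poll the Streamlit health endpoint until it answers HTTP 200 or `timeout` seconds pass."""
    url = base_url.rstrip("/") + "/_stcore/health"
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(url, timeout=interval) as resp:
                if resp.status == 200:
                    return True
        except OSError:
            # URLError, HTTPError and socket timeouts all derive from OSError:
            # the server is not up yet, so wait and retry.
            pass
        time.sleep(interval)
    return False
```

For example, a deploy script could call `wait_until_ready("http://localhost:8501")` right after `docker run -d ...` and continue only once it returns `True`.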

🔧 Extending OrionRAG

Want to enhance your Agentic RAG Chatbot further? Try these ideas:

- Integrate Local LLMs: Run open-weight models such as Mistral or LLaMA 3 locally, for example through Ollama.

- Add More Agents: Include a summarizer, web-search agent, or code generator.

- Expand Knowledge Base: Connect with Notion, SQL databases, or external APIs.

- Upgrade UI: Add chat history, markdown rendering, or speech input.

The flexibility of OrionRAG makes it a foundation for any autonomous AI assistant project.
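One way to keep "add more agents" cheap is a small registry that maps a role name to a handler, so new agents plug in without touching the orchestration code. This is a hypothetical pattern sketched in plain Python (`AGENT_REGISTRY`, `register_agent`, and `run_roles` are made-up names), not something OrionRAG or CrewAI is documented to provide.

```python
from typing import Callable

# Role name -> function that handles an input (stand-in for building a real agent).
AGENT_REGISTRY: dict[str, Callable[[str], str]] = {}

def register_agent(role: str):
    """Decorator that adds a handler to the registry under the given role."""
    def decorator(fn: Callable[[str], str]) -> Callable[[str], str]:
        if role in AGENT_REGISTRY:
            raise ValueError(f"agent role already registered: {role}")
        AGENT_REGISTRY[role] = fn
        return fn
    return decorator

@register_agent("Summarizer")
def summarize(text: str) -> str:
    # Toy behaviour: keep only the first sentence.
    return text.split(". ")[0].rstrip(".") + "."

@register_agent("WebSearch")
def web_search(query: str) -> str:
    # Placeholder: a real agent would call a search tool here.
    return f"(no web results: search for {query!r} is not wired up)"

def run_roles(roles: list[str], text: str) -> dict[str, str]:
    """Dispatch the same input to each requested role."""
    return {role: AGENT_REGISTRY[role](text) for role in roles}
```

Adding a code-generator agent then means writing one decorated function; `run_roles` and any UI that lists roles pick it up automatically.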

❓ Frequently Asked Questions (FAQ)

Q1. What makes OrionRAG an Agentic RAG Chatbot?

It uses CrewAI agents that can reason, plan, and retrieve autonomously — beyond simple question answering.

Q2. Can I run OrionRAG on CPU?

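Yes. When the LLM is an API service such as OpenAI, the container only runs the UI, the agents, and retrieval, so a CPU handles moderate usage fine. Local LLMs also run on CPU, but noticeably slower than on a GPU.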

Q3. Is it possible to add my own datasets?

Absolutely. You can load local PDFs, CSVs, or web content via LlamaIndex for custom retrieval.
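Before custom files are embedded, LlamaIndex splits them into overlapping chunks (by default with a sentence-aware splitter). The stdlib sketch below shows the idea with a simpler word-window splitter; `chunk_words`, the window size, and the overlap are illustrative choices, not LlamaIndex's defaults.

```python
def chunk_words(text: str, size: int = 8, overlap: int = 2) -> list[str]:
    """Split text into windows of `size` words, each sharing `overlap` words with the previous one."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be >= 0 and smaller than size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # this window already reaches the end of the text
    return chunks
```

The overlap keeps a sentence that straddles a boundary retrievable from either neighbouring chunk.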

Q4. Which LLMs are supported?

Both API-based (like OpenAI) and local open-weight models (like LLaMA 3) are supported.

Q5. How can I contribute?

Visit the GitHub repo → https://github.com/subasen85/OrionRAG, fork it, experiment, and submit pull requests.

🏁 Conclusion

OrionRAG exemplifies how an Agentic RAG Chatbot can redefine the chatbot experience by merging retrieval, reasoning, and autonomy.

With CrewAI, LlamaIndex, and Streamlit, it provides an elegant yet powerful architecture for creating intelligent digital agents that understand, learn, and respond meaningfully.

Whether you’re a developer exploring AI frameworks or an enterprise building smart assistants, OrionRAG offers the perfect blueprint for your next-gen conversational system.

🔗 GitHub Repository: https://github.com/subasen85/OrionRAG

📦 Category: Artificial Intelligence & Machine Learning

🧩 Published by: TechToGeek.com

✍️ Author: SenthilNathan S