In today’s rapidly evolving world of AI and Large Language Models (LLMs), the term “Prompt Engineering” has become one of the hottest topics. Whether you’re working with OpenAI, Anthropic, or Llama-based models, crafting the right prompts determines how “intelligently” your model responds.

Recently, while experimenting with LlamaIndex, I explored the concepts of PromptTemplate, Zero-Shot Prompting, and Few-Shot Prompting — and how they dramatically influence model outputs. In this post, I’ll share what I learned, along with examples and explanations, so you can start designing better prompts in your AI projects.


🌟 What is Prompt Engineering?

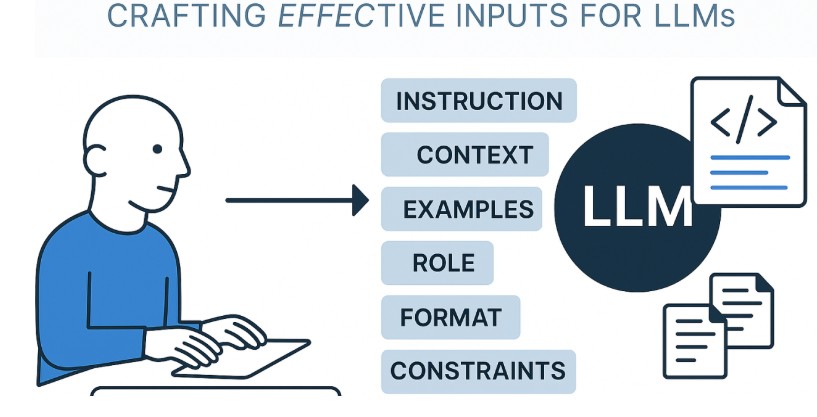

Prompt Engineering is the art and science of designing input text that helps Large Language Models (LLMs) generate the desired output.

Think of it as giving precise instructions to a super-intelligent assistant — the clearer your instruction, the better the response.

For example:

❌ Poor Prompt: “Write about AI.”

✅ Better Prompt: “Write a 200-word blog introduction on how AI is transforming healthcare using real-world examples.”

That small difference in wording can completely change the model’s tone, depth, and quality.

🧩 Why Prompt Engineering Matters

LLMs like GPT-4, Claude, or Llama-3 don’t “understand” your intent in a human sense — they pattern-match from huge datasets. So the prompt structure defines:

- The context (background or setup)

- The task (what to do)

- The format (how to respond)

- The constraints (word limit, tone, examples, etc.)
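Here is a minimal sketch of how the four parts fit together. It is plain Python with no LlamaIndex involved, and it assembles a prompt from context, task, format, and constraints. The helper `build_prompt` and its parameter names are hypothetical and exist only for illustration.

```python
def build_prompt(context: str, task: str, output_format: str, constraints: list[str]) -> str:
    """Assemble a prompt from context, task, format, and constraints (hypothetical helper)."""
    lines = [
        f"Context: {context}",
        f"Task: {task}",
        f"Format: {output_format}",
        "Constraints:",
    ]
    # One bullet per constraint keeps each rule easy for the model to spot
    lines.extend(f"- {c}" for c in constraints)
    return "\n".join(lines)


prompt = build_prompt(
    context="You are writing for a hospital IT newsletter.",
    task="Explain how AI is transforming healthcare.",
    output_format="A single blog introduction paragraph.",
    constraints=["About 200 words", "Use real-world examples", "Friendly, non-technical tone"],
)
print(prompt)
```

Each part lives in its own argument, so you can change one constraint without rewriting the whole prompt.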

A well-structured prompt helps achieve accuracy, consistency, and control in AI responses. That’s where tools like LlamaIndex’s PromptTemplate come in handy.

🧠 Understanding LlamaIndex’s PromptTemplate

When building AI applications, especially RAG (Retrieval-Augmented Generation) systems, you’ll often reuse prompts for different queries. Writing them manually every time is inefficient.

LlamaIndex solves this through PromptTemplate, which allows you to define and reuse prompt patterns with placeholders.

🧩 Example: Using PromptTemplate in LlamaIndex

Here’s a small example:

```python
from llama_index.core.prompts import PromptTemplate

# Define your reusable prompt
template_str = (
    "You are a helpful assistant. "
    "Use the provided context to answer the question.\n\n"
    "Context: {context}\n"
    "Question: {query}\n"
    "Answer:"
)

# Create a prompt template
prompt = PromptTemplate(template=template_str)

# Fill the template dynamically
formatted_prompt = prompt.format(
    context="Python is a programming language.",
    query="Who created Python?",
)

print(formatted_prompt)
```

Output:

```text
You are a helpful assistant. Use the provided context to answer the question.

Context: Python is a programming language.
Question: Who created Python?
Answer:
```

Now, you can pass this formatted prompt directly to your LLM.

This approach ensures consistency, clarity, and scalability across your application.

⚙️ How It Works

Let’s break down what happens behind the scenes when using PromptTemplate:

- Template Definition: You design a structured prompt pattern with variables ({context}, {query}, etc.).

- Dynamic Substitution: During runtime, the variables get replaced with actual user input or data fetched from your database/vector store.

- Prompt Execution: The formatted string is then passed to the LLM via an API call.

- Response Generation: The LLM interprets the prompt, generates a response, and returns it in your desired format (text, JSON, etc.).

This makes it easy to integrate prompt logic into pipelines, especially agentic and RAG architectures, where a prompt is generated dynamically for each user query.
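You can sketch the four steps with the Python standard library alone. This is not how LlamaIndex implements PromptTemplate internally. It only mirrors the idea:

- `string.Formatter().parse` discovers the placeholders.
- Substitution fails fast if a variable is missing.
- `fake_llm` is a hypothetical stand-in for a real API call.

```python
from string import Formatter


def template_vars(template: str) -> set[str]:
    """Step 1: collect the {placeholder} names used in a template."""
    return {name for _, name, _, _ in Formatter().parse(template) if name}


def fill(template: str, **values: str) -> str:
    """Step 2: substitute values, refusing to send a half-filled prompt."""
    missing = template_vars(template) - set(values)
    if missing:
        raise KeyError(f"missing template variables: {sorted(missing)}")
    return template.format(**values)


def fake_llm(prompt: str) -> str:
    """Steps 3-4: hypothetical stand-in for an LLM API call."""
    return f"[model response to a {len(prompt)}-character prompt]"


template = "Context: {context}\nQuestion: {query}\nAnswer:"
print(sorted(template_vars(template)))  # ['context', 'query']

prompt = fill(
    template,
    context="Python was created by Guido van Rossum.",
    query="Who created Python?",
)
print(fake_llm(prompt))
```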

🧩 Zero-Shot Prompting: When the Model Relies on What It Already Knows

Zero-Shot Prompting is the simplest form — you ask the model to perform a task without providing any examples.

For instance:

```python
prompt = "Translate the following English sentence to French: 'How are you today?'"
```

The prompt contains no examples of the task. The model can still usually translate the sentence correctly because it was trained on large amounts of multilingual data.
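A zero-shot prompt is just an instruction plus the input, so it is easy to wrap in a small reusable function. Below is a minimal sketch built around a hypothetical helper, `zero_shot_prompt`. The optional answer rule ("Respond with exactly one word.") is one common way to reduce the inconsistency listed under the limitations of this approach.

```python
def zero_shot_prompt(task: str, text: str, answer_rule: str = "") -> str:
    """Build a zero-shot prompt: an instruction and the input, with no examples."""
    parts = [task, f"Input: {text}"]
    if answer_rule:
        # An explicit answer rule narrows the range of acceptable replies
        parts.append(answer_rule)
    parts.append("Output:")
    return "\n".join(parts)


translation = zero_shot_prompt(
    task="Translate the following English sentence to French.",
    text="How are you today?",
)
sentiment = zero_shot_prompt(
    task="Classify the sentiment of the review as Positive, Negative, or Neutral.",
    text="The weather is fine today.",
    answer_rule="Respond with exactly one word.",
)
print(translation)
print()
print(sentiment)
```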

✅ When to Use Zero-Shot Prompting

- When the model already has sufficient prior knowledge.

- For straightforward tasks (translation, summarization, sentiment analysis).

- When you want conciseness and flexibility.

⚠️ Limitations

- Can produce inconsistent answers for ambiguous tasks.

- Lacks style or context-specific alignment.

🧪 Few-Shot Prompting: Teaching by Example

Few-Shot Prompting means giving the model a few examples before asking your actual question.

This helps the model understand format, tone, and context.

Example:

```python
prompt = """
Classify the sentiment of the following reviews as Positive, Negative, or Neutral.

Example 1:
Text: "The movie was fantastic and thrilling."
Sentiment: Positive

Example 2:
Text: "The food was cold and bland."
Sentiment: Negative

Example 3:
Text: "The weather is fine today."
Sentiment:
"""
```

Here, the model sees labelled examples (the "few shots") and will most likely answer Neutral. No Neutral example is shown, but the instruction lists Neutral as one of the allowed labels.
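When you have more than a handful of examples, you can generate the same layout from a list of (text, label) pairs instead of hand-writing it. Below is a minimal sketch built around a hypothetical helper, `few_shot_prompt`. It also adds one hypothetical Neutral example so every label appears at least once.

```python
def few_shot_prompt(instruction: str, examples: list[tuple[str, str]], query: str) -> str:
    """Render labelled examples, then leave the final label blank for the model."""
    blocks = [instruction]
    for i, (text, label) in enumerate(examples, start=1):
        blocks.append(f'Example {i}:\nText: "{text}"\nSentiment: {label}')
    # The query uses the same layout as the examples, with the answer left empty
    blocks.append(f'Text: "{query}"\nSentiment:')
    return "\n\n".join(blocks)


shots = [
    ("The movie was fantastic and thrilling.", "Positive"),
    ("The food was cold and bland.", "Negative"),
    ("The package arrived on Tuesday.", "Neutral"),  # hypothetical example
]
prompt = few_shot_prompt(
    "Classify the sentiment of the following reviews as Positive, Negative, or Neutral.",
    shots,
    "The weather is fine today.",
)
print(prompt)
```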

✅ When to Use Few-Shot Prompting

- For nuanced tasks where context matters.

- When you want consistent output formatting.

- When the model needs guidance on tone or structure.

⚙️ Combining with LlamaIndex

You can combine few-shot prompting with PromptTemplate:

```python
from llama_index.core.prompts import PromptTemplate

# Few-shot examples kept in their own string so they can be swapped or extended
examples = """
Example 1:
Text: "I love this product!"
Sentiment: Positive

Example 2:
Text: "It’s not working as expected."
Sentiment: Negative
"""

# {examples} and {input_text} are filled in at format time
template_str = """
You are a sentiment classifier.

{examples}

Text: "{input_text}"
Sentiment:
"""

prompt = PromptTemplate(template=template_str)
formatted = prompt.format(examples=examples, input_text="The product is okay.")
print(formatted)
```

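Keeping the examples in their own variable lets you swap, extend, or tailor them per use case without touching the template itself. That makes your LlamaIndex-powered app easier to adapt.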
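You can go a step further and choose which examples to include for each input instead of hardcoding them. Real systems usually rank candidate examples by embedding similarity. The sketch below swaps in simple word-overlap (Jaccard) scoring so it runs with the standard library only. The example pool and the helper names are hypothetical.

```python
import re

# Hypothetical pool of labelled examples to choose from
EXAMPLE_POOL: list[tuple[str, str]] = [
    ("I love this product!", "Positive"),
    ("It's not working as expected.", "Negative"),
    ("The delivery was fast and the box was intact.", "Positive"),
    ("The product stopped working after a week.", "Negative"),
    ("The product is available in three colors.", "Neutral"),
]


def words(text: str) -> set[str]:
    """Lowercase word set, a crude stand-in for an embedding."""
    return set(re.findall(r"[a-z']+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Overlap score in [0, 1]: shared words divided by all distinct words."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def select_examples(query: str, pool: list[tuple[str, str]], k: int = 2) -> list[tuple[str, str]]:
    """Return the k pool examples whose wording is closest to the query."""
    q = words(query)
    return sorted(pool, key=lambda ex: jaccard(q, words(ex[0])), reverse=True)[:k]


def render(examples: list[tuple[str, str]]) -> str:
    """Format selected examples in the same layout as the template's {examples}."""
    return "\n\n".join(
        f'Example {i}:\nText: "{text}"\nSentiment: {label}'
        for i, (text, label) in enumerate(examples, start=1)
    )


chosen = select_examples("The product is okay.", EXAMPLE_POOL)
print(render(chosen))
```

You can then pass the rendered string as the `examples` variable of the PromptTemplate shown above.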

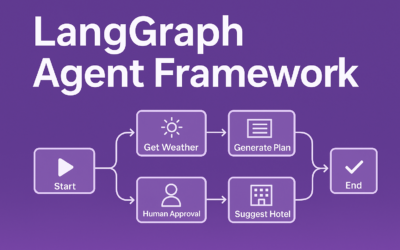

🚀 Practical Use Case: Prompting in an Agentic RAG System

In agentic RAG systems, you can give each agent a prompt template tailored to its role, for example:

- Retriever Agent: Retrieves the most relevant context from a vector database.

- Summarizer Agent: Uses a prompt to compress retrieved context.

- Responder Agent: Uses PromptTemplate to generate the final answer.

This modular design gives each part of the system a prompt suited to its job: reasoning, summarizing, or responding. The pattern is similar in spirit to the multi-agent setups that frameworks such as CrewAI or LangGraph help you build.
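You can sketch the role split as one template per agent, chained so each agent's output feeds the next. This is a minimal, framework-free illustration. It is not how CrewAI or LangGraph are implemented. The document list, the keyword-based `retrieve` function (a stand-in for a vector database), and the `call_llm` stub are all hypothetical.

```python
import re

# One template per agent role
ROLE_TEMPLATES = {
    "summarizer": "Summarize the context below in one sentence.\n\nContext:\n{context}",
    "responder": (
        "Using only this summary, answer the question.\n\n"
        "Summary: {summary}\nQuestion: {query}\nAnswer:"
    ),
}

# Hypothetical document store
DOCUMENTS = [
    "LlamaIndex is a framework for building LLM applications over your data.",
    "PromptTemplate in LlamaIndex fills named placeholders in a prompt string.",
    "Paris is the capital of France.",
]


def tokens(text: str) -> set[str]:
    return set(re.findall(r"\w+", text.lower()))


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Retriever agent (hypothetical): rank documents by shared words with the query."""
    q = tokens(query)
    return sorted(docs, key=lambda d: len(q & tokens(d)), reverse=True)[:k]


def call_llm(prompt: str) -> str:
    """Hypothetical stub; in LlamaIndex this could be llm.complete(prompt).text."""
    return f"<answer to: {prompt.splitlines()[0]}>"


def answer(query: str) -> str:
    """Chain the roles: retrieve, then summarize, then respond."""
    context = "\n".join(retrieve(query, DOCUMENTS))
    summary = call_llm(ROLE_TEMPLATES["summarizer"].format(context=context))
    return call_llm(ROLE_TEMPLATES["responder"].format(summary=summary, query=query))


print(answer("What does PromptTemplate do in LlamaIndex?"))
```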

🧩 Best Practices for Effective Prompt Design

- Be Explicit: State what you want clearly. Avoid vague requests.

- Define Output Format: Use structure, e.g., “Return output in JSON.”

- Use Role Play: Start prompts with “You are a… (teacher, data analyst, chatbot)” to set context.

- Chain Prompts: Use multiple smaller prompts instead of one large one for better control.

- Iterate & Refine: Test and adjust prompts — small wording changes can make big differences.
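When you ask for JSON ("Define Output Format"), validate the reply rather than trusting it. Below is a minimal standard-library sketch. The reply string is a hardcoded, hypothetical model output. Real replies may wrap the JSON in extra text or fail to parse, which is why the parse step is guarded.

```python
import json

PROMPT = (
    "Classify the sentiment of the review.\n"
    'Return output in JSON with exactly two keys: "sentiment" '
    '(one of "Positive", "Negative", "Neutral") and "confidence" (a number from 0 to 1).\n'
    "Review: {review}"
)

ALLOWED = {"Positive", "Negative", "Neutral"}


def parse_reply(reply: str) -> dict:
    """Parse and validate a JSON reply; raise ValueError if it breaks the contract."""
    try:
        data = json.loads(reply)
    except json.JSONDecodeError as err:
        raise ValueError(f"reply is not valid JSON: {err}") from err
    if not isinstance(data, dict) or data.get("sentiment") not in ALLOWED:
        raise ValueError(f"unexpected sentiment in reply: {reply!r}")
    if not isinstance(data.get("confidence"), (int, float)):
        raise ValueError("confidence must be a number")
    return data


prompt = PROMPT.format(review="The product is okay.")
# Hypothetical model reply, hardcoded so the sketch runs offline
reply = '{"sentiment": "Neutral", "confidence": 0.72}'
print(parse_reply(reply))
```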

💻 Sample Workflow: From Template to Execution

Here’s how you might integrate this concept in an actual app:

```python
# Requires the OpenAI integration (pip install llama-index-llms-openai)
# and an OPENAI_API_KEY environment variable.
from llama_index.llms.openai import OpenAI
from llama_index.core.prompts import PromptTemplate

# Define template
template = """
You are a helpful data assistant.
Summarize the following text in 3 sentences:

{text}
"""

prompt = PromptTemplate(template=template)

# Fill prompt dynamically
user_text = "Artificial Intelligence is transforming industries..."
formatted_prompt = prompt.format(text=user_text)

# Send to model
llm = OpenAI(model="gpt-4-turbo")
response = llm.complete(formatted_prompt)

# The generated text of the completion
print(response.text)
```

This workflow connects structured prompt templates to real-time model execution — an essential part of scalable AI systems.

❓ FAQ: Prompt Engineering Essentials

1. What is the difference between PromptTemplate and simple string formatting?

Both insert variables into text. PromptTemplate also tracks its template variables, supports partial formatting, and plugs directly into LlamaIndex query engines and pipelines, so the same template can be reused across your application.

2. Which is better: Zero-Shot or Few-Shot Prompting?

It depends on your task.

- Use Zero-Shot for general queries or factual questions.

- Use Few-Shot when you want consistent style or structured output.

3. Can I use PromptTemplate with OpenAI models?

Yes. LlamaIndex provides integrations for many LLM providers (OpenAI, Anthropic, Hugging Face, and others). A PromptTemplate is formatted into plain text (or chat messages) before it reaches the model, so the same template works with any of them.

4. What if my prompts are too long?

You can use retrieval-based summarization or context compression in LlamaIndex to reduce the token count while preserving the essential meaning.
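Here is a rough illustration of keeping a prompt within budget. It keeps the highest-ranked context chunks until an estimated token limit is reached. It is simple truncation, not LlamaIndex's summarization or compression features, and the function names are hypothetical. The sketch assumes:

- Chunks arrive already sorted by relevance.
- English text averages about four characters per token, a common rule of thumb. For real limits, count tokens with your model's tokenizer.

```python
def estimate_tokens(text: str) -> int:
    """Rough estimate: about 4 characters per token for English (heuristic only)."""
    return max(1, len(text) // 4)


def fit_context(chunks: list[str], budget: int) -> list[str]:
    """Keep chunks in relevance order until the token budget would be exceeded."""
    kept, used = [], 0
    for chunk in chunks:
        cost = estimate_tokens(chunk)
        if used + cost > budget:
            break
        kept.append(chunk)
        used += cost
    return kept


chunks = ["a" * 400, "b" * 400, "c" * 400]  # about 100 estimated tokens each
print(len(fit_context(chunks, budget=250)))  # 2
```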

5. How can I debug prompts?

Try logging formatted prompts before sending them to the LLM. You can also use PromptLayer or LangSmith for visual debugging and tracking.
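Here is a minimal sketch of the logging advice using Python's standard `logging` module. `complete_with_logging` and `fake_llm` are hypothetical names. Swap the stub for your real client call, for example a function that returns `llm.complete(prompt).text`.

```python
import logging

logging.basicConfig(level=logging.DEBUG, format="%(levelname)s %(name)s: %(message)s")
logger = logging.getLogger("prompts")

TEMPLATE = "Context: {context}\nQuestion: {query}\nAnswer:"


def complete_with_logging(llm_call, **variables: str) -> str:
    """Format the prompt, log exactly what is sent, then call the model."""
    prompt = TEMPLATE.format(**variables)
    logger.debug("Prompt sent to LLM:\n%s", prompt)
    reply = llm_call(prompt)
    logger.debug("Raw reply: %r", reply)
    return reply


def fake_llm(prompt: str) -> str:
    """Hypothetical stub standing in for a real model call."""
    return "Guido van Rossum"


result = complete_with_logging(
    fake_llm,
    context="Python was created by Guido van Rossum.",
    query="Who created Python?",
)
print(result)
```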

🏁 Conclusion

Prompt Engineering is not just a “skill” — it’s a core capability for building intelligent, controllable AI systems.

By mastering tools like PromptTemplate from LlamaIndex, and techniques like Zero-Shot and Few-Shot Prompting, you can build AI assistants, chatbots, and RAG systems that truly understand user intent and respond meaningfully.

As you continue your AI journey, remember — the best prompts are born through experimentation.

So, tweak, test, and iterate until your AI truly sounds like you.

Written and Published by TechToGeek.com

Author: SenthilNathan S — AI Enthusiast, Developer

Thanks!
