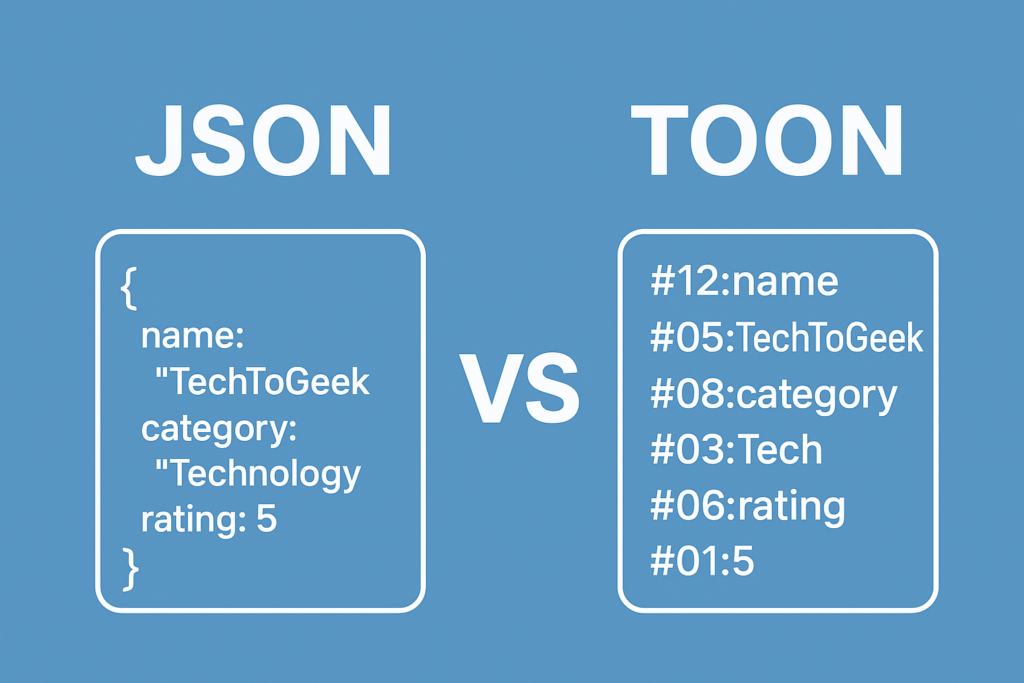

TOON over JSON

For years, JSON (JavaScript Object Notation) has been the standard for structuring and transmitting data across APIs, applications, and AI pipelines. But Artificial Intelligence (AI) systems are evolving rapidly, and the demand for faster, cleaner, and more efficient data processing is higher than ever. For many modern AI workloads, the overhead of JSON has become a real cost. This is where TOON (Token-Oriented Object Notation) steps in as a next-generation alternative.

In this blog, we explore TOON vs JSON, why TOON is emerging as a strong choice for AI developers, and how it can improve speed, memory efficiency, accuracy, and large-scale data handling. If you’re an AI engineer, data scientist, or backend developer, understanding where TOON beats JSON can give you a meaningful performance advantage.

TOON (Token-Oriented Object Notation) is a new, lightweight data format for AI, designed as a compact alternative to verbose formats like JSON or YAML when structured data is passed to Large Language Models (LLMs), where every token adds cost. It uses an indentation-based structure with a tabular, CSV-like layout for uniform lists: keys are declared once and values are listed in rows. That makes it efficient for structured data such as product catalogs or analytics, where it can reduce data size by 30–60%, which in turn means faster processing and lower API fees.


What Is JSON? (Quick Overview)

JSON is a human-readable format widely used across the web. It is:

- Text-based

- Easy to debug

- Supported everywhere

- Human-friendly

A typical JSON example looks like:

    {
      "name": "TechToGeek",
      "type": "Technology Blog",
      "rating": 5
    }

While JSON is simple and universal, it comes with major drawbacks—especially in AI systems where speed, compression, and parsing efficiency matter.

What Is TOON (Token-Oriented Object Notation)?

TOON is a token-oriented data format that cuts redundancy by declaring keys once instead of repeating them for every record. It prioritizes:

- Performance

- Compression

- Scalability

- Low-memory usage

A TOON representation might look like:

    name: TechToGeek
    type: Technology Blog
    rating: 5

Or for lists of uniform objects (like products):

sku | qty | price

--- | --- | ---

A123 | 5 | 1.99

B456 | 2 | 3.50

TOON is not designed primarily for human readability; it is designed for machine performance, making it well suited to AI workloads.
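
To make the header-plus-rows idea concrete, here is a minimal Python sketch that writes a list of uniform records with the keys declared once. The function name `encode_table` and the ` | ` separator are assumptions chosen to mirror the product example above; this is not the official TOON encoder, and the exact TOON syntax may differ.

```python
import json


def encode_table(rows):
    """Encode a list of uniform dicts as one header line plus one line per row."""
    if not rows:
        return ""
    keys = list(rows[0].keys())
    # The layout only works when every record carries the same keys in the same order.
    for row in rows:
        if list(row.keys()) != keys:
            raise ValueError("rows are not uniform")
    lines = [" | ".join(keys)]  # keys appear exactly once, in the header
    for row in rows:
        lines.append(" | ".join(str(row[k]) for k in keys))
    return "\n".join(lines)


products = [
    {"sku": "A123", "qty": 5, "price": 1.99},
    {"sku": "B456", "qty": 2, "price": 3.50},
]

table_text = encode_table(products)
json_text = json.dumps(products)

print(table_text)
print(len(table_text), "characters as a table vs", len(json_text), "as JSON")
```

Even with only two records, the table form is shorter because `sku`, `qty`, and `price` are written once rather than once per record. Note that this sketch does no escaping, so values containing the separator would need extra handling.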

Why AI Developers Should Prefer TOON Over JSON

Below are the key reasons TOON often delivers significantly better performance than JSON inside AI systems.

1. TOON Provides Faster Data Ingestion

AI systems rely on real-time data from:

- APIs

- Sensors

- Message queues

- Logs

- Data streams

For uniform, structured data, TOON payloads can be 30–60% smaller than the equivalent JSON. This means:

- Faster network transfers

- Less waiting time

- Faster GPU/CPU data feeding

This is critical for AI applications like:

- Autonomous navigation

- High-frequency trading

- Real-time analytics

- Speech recognition

- IoT device data

In the comparison of TOON vs JSON, TOON clearly wins in ingestion speed.
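
A rough way to check the size claim on your own data is to serialize the same records both ways and compare byte counts. The sketch below uses a simplified comma-separated header-plus-rows layout as a stand-in for TOON; the helper names and the synthetic sensor readings are illustrative assumptions, not part of any TOON library.

```python
import json


def tabular_bytes(rows):
    """UTF-8 size of a header line followed by one comma-separated line per row."""
    keys = list(rows[0])
    lines = [",".join(keys)]
    lines += [",".join(str(r[k]) for k in keys) for r in rows]
    return len("\n".join(lines).encode("utf-8"))


def json_bytes(rows):
    """UTF-8 size of the same rows as compact JSON (no extra whitespace)."""
    return len(json.dumps(rows, separators=(",", ":")).encode("utf-8"))


def savings_percent(rows):
    """How much smaller the tabular form is, as a percentage of the JSON size."""
    j = json_bytes(rows)
    return 100.0 * (j - tabular_bytes(rows)) / j


# Synthetic, highly repetitive records (hypothetical sensor data).
readings = [
    {"sensor_id": i, "temperature": 20 + i % 5, "status": "ok"}
    for i in range(1000)
]

print(f"{savings_percent(readings):.1f}% smaller than compact JSON")
```

Short values under long, repeated keys are close to a best case for a tabular layout, so expect a different percentage on nested or irregular data.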

2. Parsing TOON Requires Less Computation

JSON parsing is string-heavy. Every key is processed as text, which wastes CPU cycles.

TOON parsing uses:

- Predefined tokens

- Numeric references

- Minimal string operations

This results in:

- Higher throughput

- Faster deserialization

- Lower latency

AI pipelines, especially those that process millions of events per minute, benefit enormously.
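
As an illustration of why a header-plus-rows layout needs little string handling, here is a minimal decoder sketch: the keys are read once from the header, and each following line is a single split. `decode_table` and its ` | ` separator are assumptions for this example, not an official TOON parser, and no speed claim is made for this pure-Python version.

```python
def decode_table(text, sep=" | "):
    """Turn header-plus-rows text back into a list of dicts."""
    lines = [ln for ln in text.splitlines() if ln.strip()]
    if not lines:
        return []
    keys = lines[0].split(sep)  # key names are parsed once, from the header
    rows = []
    for ln in lines[1:]:
        values = ln.split(sep)
        if len(values) != len(keys):
            raise ValueError(
                f"expected {len(keys)} fields, got {len(values)}: {ln!r}"
            )
        rows.append(dict(zip(keys, values)))
    return rows


sample = "sku | qty | price\nA123 | 5 | 1.99\nB456 | 2 | 3.50"
records = decode_table(sample)
print(records[0])
```

Values come back as strings here; converting them to numbers is left to the caller.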

3. TOON Improves Memory Efficiency

AI systems need RAM for:

- Model weights

- Tensors

- Feature maps

- Embeddings

JSON consumes more memory because:

- Each key is repeated

- Formatting characters add overhead

- Full strings must be stored in memory

TOON replaces these strings with compact tokens, giving AI systems:

- More available RAM

- Bigger batch sizes

- Faster model response times

For large-scale AI, the memory savings make a massive difference in performance.
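
The same "declare keys once" idea can be observed after parsing, too. The sketch below compares a list of per-record dicts with one shared header plus plain tuples, using `sys.getsizeof`. It assumes CPython and measures only the container objects (the values themselves are identical in both layouts), so treat the numbers as a rough indication rather than a benchmark.

```python
import sys

# Hypothetical catalog records, one dict per record.
records = [{"sku": f"S{i}", "qty": i, "price": 1.0 + i} for i in range(10_000)]

# Row-oriented layout: every record is its own dict.
dict_bytes = sum(sys.getsizeof(r) for r in records)

# Header-plus-rows layout: key names stored once, each record is a tuple.
header = ("sku", "qty", "price")
rows = [tuple(r[k] for k in header) for r in records]
tuple_bytes = sys.getsizeof(header) + sum(sys.getsizeof(t) for t in rows)

print(f"dict containers:  {dict_bytes:,} bytes")
print(f"tuple containers: {tuple_bytes:,} bytes")
```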

4. Cleaner Data = Better AI

A major problem in AI is data inconsistency.

JSON often introduces:

- Ambiguous types

- Formatting errors

- Variability in string length

- Whitespace irregularities

TOON eliminates these issues because tokens enforce:

- Strict type rules

- Consistent structure

- Zero ambiguity

Cleaner data means:

- Better training

- Better accuracy

- Fewer preprocessing steps

This alone makes a strong case for TOON over JSON.
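
One way an application could get strict, consistent types out of row-based data is to declare a column schema once and check every row against it. The sketch below is an assumption about how that might look in Python; `SCHEMA` and `parse_row` are hypothetical names, and the post does not show a built-in TOON mechanism for this.

```python
# Hypothetical column schema: declared once, applied to every row.
SCHEMA = {"sku": str, "qty": int, "price": float}


def parse_row(line, schema=SCHEMA, sep=","):
    """Split one row and convert each field to its declared type, or fail loudly."""
    fields = line.split(sep)
    if len(fields) != len(schema):
        raise ValueError(f"expected {len(schema)} fields, got {len(fields)}")
    row = {}
    for (name, caster), raw in zip(schema.items(), fields):
        try:
            row[name] = caster(raw.strip())
        except ValueError as exc:
            raise ValueError(
                f"column {name!r}: cannot read {raw!r} as {caster.__name__}"
            ) from exc
    return row


print(parse_row("A123,5,1.99"))
```

A malformed row such as `"A123,five,1.99"` raises a `ValueError` naming the offending column, so bad records are rejected before they reach training or inference code.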

5. TOON Is Perfect for Edge AI and IoT

Edge devices have:

- Limited CPU

- Minimal storage

- Constrained memory

- Bandwidth restrictions

TOON’s compact and lightweight structure allows:

- Faster sensor data handling

- Lower transmission costs

- Low-power AI inference

- Quick event responses

In TOON vs JSON, TOON is the clear winner for:

- Smart cameras

- Drones

- Wearables

- Smart home devices

- Vehicle sensors

6. TOON Accelerates Streaming AI Systems

AI today is shifting from batch processing to streaming.

Examples:

- Live speech recognition

- Fraud detection

- Traffic monitoring

- Social media sentiment analysis

In streaming environments:

- JSON slows down pipelines

- JSON increases bandwidth cost

- JSON increases CPU usage

But TOON:

- Reads tokens sequentially

- Minimizes payload size

- Reduces overhead

- Supports high-frequency events

This makes TOON especially valuable in event-driven AI architectures.
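
Sequential reading maps naturally onto a Python generator: read the header once, then yield one record per incoming line without buffering the whole payload. This is a minimal sketch under assumptions: `iter_rows` is a hypothetical helper, the layout is a simplified comma-separated stand-in for TOON, and `io.StringIO` plays the role of a socket or message-queue stream.

```python
import io


def iter_rows(lines, sep=","):
    """Lazily yield one dict per data line; the first line is the header."""
    it = iter(lines)
    try:
        header = next(it).rstrip("\n").split(sep)
    except StopIteration:
        return  # empty stream: nothing to yield
    for line in it:
        line = line.rstrip("\n")
        if not line:
            continue  # skip blank lines between events
        yield dict(zip(header, line.split(sep)))


# Stand-in for a live event stream.
stream = io.StringIO("event,user,amount\nlogin,u1,0\npurchase,u2,49.90\n")

for event in iter_rows(stream):
    print(event)
```

Because each record is complete as soon as its line arrives, a consumer can act on the first event before the rest of the stream has been received.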

7. Lower Infrastructure Cost

Because TOON reduces:

- Data size

- CPU load

- Memory usage

- Bandwidth consumption

Companies can:

- Use fewer servers

- Reduce cloud bills

- Scale cheaper

- Run more AI models in parallel

In large systems, the cost savings are substantial.
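
To put a number on the savings for token-priced LLM APIs, a back-of-the-envelope calculation is enough. Every figure in the sketch below (tokens per request, request volume, price per million tokens, and the 40% reduction) is a hypothetical input, not a measured result or a real price.

```python
def monthly_token_cost(tokens_per_request, requests_per_month, usd_per_million_tokens):
    """Monthly spend on input tokens for a given request volume and price."""
    return tokens_per_request * requests_per_month * usd_per_million_tokens / 1_000_000


def monthly_savings(json_tokens, reduction, requests_per_month, usd_per_million_tokens):
    """Spend avoided when each request uses `reduction` (0.0 to 1.0) fewer tokens."""
    before = monthly_token_cost(json_tokens, requests_per_month, usd_per_million_tokens)
    after = monthly_token_cost(
        json_tokens * (1 - reduction), requests_per_month, usd_per_million_tokens
    )
    return before - after


# Hypothetical workload: 2,000 structured-data tokens per request,
# 1,000,000 requests per month, $3 per million tokens, 40% fewer tokens.
saved = monthly_savings(2_000, 0.40, 1_000_000, 3.0)
print(f"${saved:,.2f} saved per month")
```

With these made-up inputs the monthly bill drops from $6,000 to $3,600. The useful part is the formula: plug in your own token counts and pricing.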

TOON vs JSON: Side-by-Side Comparison

| Feature | JSON | TOON |
|---|---|---|
| Readability | High | Low |
| Data Size | Medium | Very Small |
| Parsing Speed | Moderate | Very Fast |
| Memory Usage | High | Low |
| Human Friendly | Yes | No |
| AI-Optimized | No | Yes |
| Best Use | Web APIs | AI, Streaming, IoT |

Why TOON Is the Future of AI Data Formats

As AI transitions into:

- Real-time inference

- High-volume data handling

- Lightweight edge computing

TOON vs JSON becomes a crucial decision.

JSON remains great for general applications, but TOON is:

- Faster

- Cleaner

- More efficient

- Better aligned with AI’s growing needs

AI developers who adopt TOON early can build systems that:

- Scale more efficiently

- Process data faster

- Deliver lower latency

- Reduce operational costs

✅ How OpenAI, Groq, and Claude Would Use TOON (Token-Oriented Object Notation)

OpenAI, Groq, and Claude do NOT publicly use TOON (Token-Oriented Object Notation) today.

But we can realistically explain how they would use TOON if adopted—based on how LLMs, high-token-throughput systems, and inference engines work internally.

TOON is designed for token-efficient, machine-oriented data exchange, unlike JSON, which is structured for human readability.

TOON becomes extremely valuable wherever the system must process massive volumes of tokens per second, especially for:

- LLM inference

- Embedding retrieval

- Multi-agent communication

- Vector database exchange

- High-speed API responses

Let’s break it down per company.

🟦 1. How OpenAI Could Use TOON

OpenAI’s GPT-4, GPT-4o, and GPT-5 models run at extremely high token throughput and rely heavily on:

- Efficient input formatting

- System prompts

- Multi-turn memory

- Function calling / structured outputs

- Agent-to-agent communication

🎯 TOON Use Cases for OpenAI

1. Faster Function Calling

OpenAI function calling currently uses JSON, which has:

- Unnecessary brackets

- Repeated key names

- Verbose nesting

- High parsing overhead

With TOON:

The function schema could be encoded in a fixed set of token identifiers, reducing input size by ~40–70%.

2. More Efficient Reasoning Traces

GPT models generate internal hidden chain-of-thought tokens.

Today: JSON-like structures slow down logging & sampling.

With TOON: logs become extremely compact.

3. Multi-Agent OpenAI Systems (e.g., OpenAI o1)

OpenAI’s reasoning models exchange structured messages internally.

Using TOON:

- Smaller message size

- Faster round-trip loops

- Higher reasoning depth

- Lower compute cost

4. RAG (Retrieval) with Structured Context

OpenAI embeddings return large arrays and metadata.

TOON makes this lightweight.

Result:

Cheaper inference + faster responses.

🟧 2. How Groq Could Use TOON

Groq builds ultra-fast LLM inference hardware achieving 500 tokens/sec+ and aims for structured, low-latency communication.

This makes TOON especially valuable.

🎯 TOON Use Cases for Groq

1. Zero-Latency Token Streaming

Groq thrives on deterministic, low-latency pipelines.

TOON eliminates parsing overhead, enabling:

- Lower jitter

- More stable latency

- Faster token delivery

2. Internal Graph Compiler & Model Loading

Groq’s compiler consumes model graphs and metadata.

TOON = faster loading, especially for:

- Multi-modal processors

- Kernel execution graphs

- Memory allocation metadata

3. Multi-Model Serving

Groq servers run multiple LLMs (Mixtral, Llama, Gemma).

TOON enables:

- Smaller RPC payloads

- Faster routing

- Reduced CPU bottlenecks

This leads to more throughput per dollar.

🟩 3. How Claude (Anthropic) Could Use TOON

Claude 3 focuses on:

- Constitutional AI

- Long-context reasoning (up to 1M tokens)

- In-depth structured outputs

- Multi-document processing

This makes TOON perfect for structured contexts.

🎯 TOON Use Cases for Claude

1. Handling 1M-Token Context Windows

JSON breaks down with huge context windows due to:

- Repetition

- Verbosity

- Deep nesting

TOON drastically shrinks context size.

Result:

Claude can process bigger documents at the same hardware cost.

2. Constitutional Reasoning Rules

Anthropic models use constitutional rule sets.

In TOON form:

- Rules are stored as compact tokens

- Faster interpretation

- More efficient internal routing

3. Multi-Document Q&A

Claude excels at analyzing PDFs, docs, and spreadsheets.

TOON would enable:

- Smaller metadata

- Faster chunk transfer

- Lower parsing time

🧩 Summary Table — How They Would Use TOON

| Company | How They Use JSON Today | How They Would Use TOON | Benefit |
|---|---|---|---|
| OpenAI | Function calling, agent messaging, logs | Token-efficient calls & agent messages | Lower cost, faster responses |
| Groq | API routing, streaming | Near-zero-latency structured tokens | Ultra-fast streaming |
| Claude (Anthropic) | Constitutional rules, long context | Compact context & structured data | Bigger context, cheaper inference |

🧠 Why They Will Eventually Shift to TOON

Because:

- JSON is too verbose

- LLMs process tokens, not text

- TOON reduces redundancy

- TOON aligns with token-native learning

- TOON makes agent communication efficient

The shift will mirror the transition from:

- XML → JSON

- REST → gRPC

- CPU → GPU → TPU → Groq

TOON is simply the next step in that evolution.

Conclusion

TOON is not designed to replace JSON for everyday web development. But for AI workloads—where speed, efficiency, and performance matter—TOON offers clear advantages. When comparing TOON vs JSON, TOON enables AI systems to become:

- Faster

- Smarter

- More efficient

- More scalable

- More reliable

As AI continues to grow, TOON will become a preferred choice for high-performance, high-frequency, and resource-sensitive applications.
