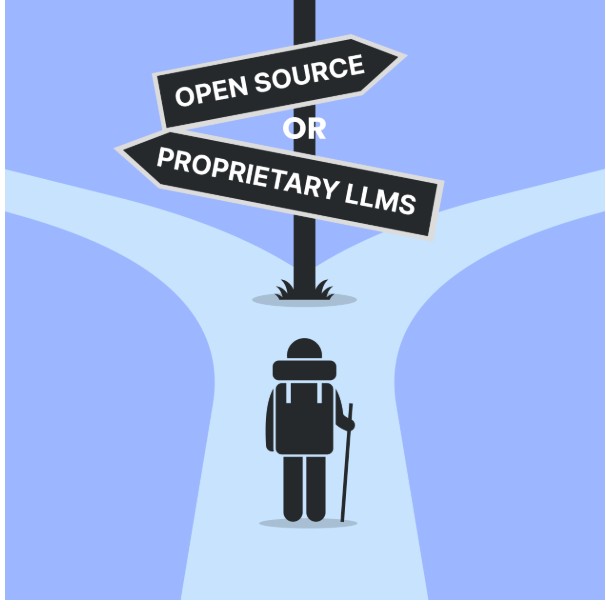

Local vs. Open-Source vs. Proprietary LLMs: Which One Should You Choose?

Artificial Intelligence (AI) has entered a new phase with the rise of Large Language Models (LLMs), the engines behind today’s most advanced chatbots, coding assistants, and enterprise automation tools.

Whether you’re a developer, researcher, or business owner, one critical question stands out:

Should you choose a Local LLM, an Open-Source LLM, or a Proprietary LLM?

Each type has its strengths, limitations, and ideal use cases. In this post, we’ll break down what LLMs are, how they work, and how to decide which option fits your needs best.

What is an LLM?

A Large Language Model (LLM) is an advanced AI system trained on massive amounts of text data to understand, generate, and interact using human language.

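These models rely on deep learning architectures, specifically transformers, and are trained to predict the next token (roughly, the next word or word fragment) in a sequence. That single skill underlies tasks such as summarizing text, translating languages, and writing code.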

At their core, LLMs are built using neural networks with billions (or even trillions) of parameters. These parameters help the model learn patterns, grammar, reasoning, and context from the data it’s trained on.

Key Capabilities of LLMs:

- Natural language understanding (NLU)

- Text generation and summarization

- Sentiment analysis

- Code generation and debugging

- Question answering

- Reasoning and decision-making assistance

Popular LLM Examples:

- GPT-4 (by OpenAI) – Proprietary, cloud-based, used in ChatGPT and Copilot.

- Claude 3 (by Anthropic) – Proprietary model focused on safety and reasoning.

- Llama 3 (by Meta) – Open-weight model released under Meta’s community license; free to download and run locally or in the cloud, subject to the license terms.

- Mistral 7B (by Mistral AI) – Lightweight, open-source, and performance-efficient.

- Gemma 2B (by Google DeepMind) – Compact model optimized for local deployment.

How LLMs Work (A Simplified View)

Every LLM undergoes three critical phases:

- Pretraining: The model learns from vast text corpora (books, websites, research papers) to recognize patterns and relationships between words.

- Fine-Tuning: The pretrained model is adjusted for specific tasks (like chatbots, summarization, or coding assistance) using curated datasets.

- Inference: Once trained, the model can generate text, respond to queries, or assist users based on learned knowledge.
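To make the pretraining and inference phases concrete, here is a deliberately tiny sketch. It is not a transformer: it is a word-pair counter that "pretrains" by counting which word follows which, then "infers" by returning the most frequent follower. The corpus and the function names `pretrain` and `predict_next` are made up for illustration.

```python
from collections import Counter, defaultdict


def pretrain(corpus):
    """Count which word follows which: a toy stand-in for pretraining."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


def predict_next(counts, word):
    """Inference: return the most frequent follower of `word`, or None."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Illustrative three-sentence "corpus" (an assumption, not real training data).
corpus = [
    "the model predicts the next word",
    "the model learns patterns from text",
    "the next word depends on context",
]
model = pretrain(corpus)
print(predict_next(model, "next"))  # "word" follows "next" in two sentences
```

A real LLM replaces the counting table with billions of learned parameters and looks at the whole preceding context rather than a single word, but the train-then-predict loop has the same shape.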

Modern LLMs use transformer-based architectures built around attention mechanisms, which allow the model to “focus” on the most relevant parts of the text when producing each part of its response.
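For readers who want to see what that "focus" means numerically, the sketch below implements scaled dot-product attention, the standard formulation softmax(QKᵀ / √d_k)·V, in plain Python. The tiny vectors are invented for illustration; production models use optimized tensor libraries and many attention heads in parallel.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    highest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys
        ]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
    return outputs


# Toy example: the query is far more similar to the first key,
# so the output is pulled toward the first value.
print(attention([[10.0, 0.0]], [[10.0, 0.0], [0.0, 10.0]], [[1.0], [0.0]]))
```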

The Rise of Local LLMs

Local LLMs are AI models that run directly on your personal computer or private server, without depending on cloud access.

This makes them ideal for privacy-sensitive or offline environments.

Advantages of Local LLMs

- Full Data Privacy: Your data never leaves your system.

- Offline Operation: Useful in restricted or high-security environments.

- Customizability: Developers can fine-tune the model for specific workflows.

- No Usage Limits: You aren’t bound by API quotas or subscription plans.

Limitations

- Hardware Requirements: Running large models locally requires high-end GPUs or optimized CPUs.

- Maintenance: Updates and improvements must be handled manually.

- Scalability: Harder to scale for multiple users or enterprise setups.
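A quick way to judge the hardware requirement is to estimate how much memory the weights alone occupy: parameter count times bytes per parameter. The sketch below does that arithmetic. It is a lower bound only, since it ignores the context cache, activations, and runtime overhead, and the precision table is a simplification.

```python
# Approximate storage per parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params, precision):
    """Memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


for precision in ("fp16", "int8", "int4"):
    gb = weight_memory_gb(7e9, precision)
    print(f"7B model at {precision}: about {gb:.1f} GB")
```

By this estimate a 7B model needs roughly 14 GB at 16-bit precision but about 3.5 GB at 4-bit, which is why quantized builds are the usual choice for laptops and consumer GPUs.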

Examples

- Mistral 7B or Llama 3 8B running locally using frameworks like Ollama, LM Studio, or GPT4All.

- Private AI assistants or on-premise chatbots for corporate environments.
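As a rough idea of what talking to a local model looks like, here is a minimal sketch that calls Ollama’s local HTTP API using only the Python standard library. It assumes Ollama is installed and running on its default port (11434) and that a model tagged `llama3` has already been pulled; adjust both to your setup and check the Ollama API documentation for your version.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt, model="llama3"):
    """Build the POST request; `stream: False` asks for one JSON reply."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt, model="llama3"):
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model)) as resp:
        return json.loads(resp.read())["response"]


# Usage (requires a running Ollama server):
# print(generate("Explain what a local LLM is in one sentence."))
```

Because the request goes to `localhost`, the prompt is sent to a server on your own machine rather than to a cloud service, which is the privacy benefit described above.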

Understanding Open-Source LLMs

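Open-source LLMs are models whose architecture and weights are publicly available, sometimes along with the training code or data. Many popular releases are strictly “open-weight”: the weights are downloadable, but the training data is not published and the license may add conditions.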

They promote transparency, collaboration, and community-driven innovation.

Advantages of Open-Source LLMs

- Free and Flexible: Anyone can modify or fine-tune the model.

- Transparent Development: Researchers can inspect and improve the model.

- Innovation-Friendly: Open collaboration accelerates progress.

- Integration Freedom: Can be embedded into apps, websites, or internal tools, subject to each model’s license terms.

Limitations

- Quality Variance: Some open-source models may not match commercial-level performance.

- Security Risks: Without proper governance, they may expose vulnerabilities.

- Support: Limited official support compared to proprietary models.

Examples

- Llama 3, Falcon 180B, Mistral 7B, and Gemma 2B.

These models can be fine-tuned or quantized for use in smaller devices or local systems.
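To show what quantization does, here is a minimal sketch of symmetric 8-bit quantization on a handful of weights. Real toolchains work per-block or per-channel and often use 4-bit schemes, so treat this as an illustration of the principle rather than what any specific tool does. The function names are made up for the example.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate floats from the stored integers."""
    return [q * scale for q in quantized]


weights = [0.5, -1.0, 0.25, 0.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q)         # small integers, one byte each instead of four
print(restored)  # close to the originals, with a small rounding error
```

The model gets smaller because each weight is stored as one byte plus a shared scale, at the cost of a rounding error of at most half a scale step per weight.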

Proprietary LLMs Explained

Proprietary LLMs are commercial AI models developed and owned by private companies like OpenAI, Google, or Anthropic.

They are usually accessible through API subscriptions or cloud platforms and offer state-of-the-art accuracy, reliability, and continuous updates.

Advantages of Proprietary LLMs

- High Accuracy and Performance: Trained on large, curated datasets.

- Regular Updates: Backed by enterprise-level R&D teams.

- Integrated Ecosystem: Plug-and-play integration with other cloud services.

- Enterprise Support: SLA guarantees, data compliance, and technical assistance.

Limitations

- Data Privacy Concerns: User data might be processed or logged on company servers.

- Cost: API-based models can become expensive at scale.

- Limited Control: No access to model weights or architecture.

- Dependency: Businesses become tied to one vendor.
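To see how API costs grow with volume, a back-of-the-envelope estimate helps. The sketch below multiplies request volume by token counts and per-million-token prices. The prices in the example are placeholders, not any vendor’s real rates, so substitute the figures from your provider’s current pricing page.

```python
def monthly_api_cost(requests_per_day, input_tokens, output_tokens,
                     price_in_per_million, price_out_per_million, days=30):
    """Estimated monthly spend for a fixed request profile."""
    per_request = (
        input_tokens * price_in_per_million
        + output_tokens * price_out_per_million
    ) / 1_000_000
    return requests_per_day * days * per_request


# Hypothetical prices: $1 per million input tokens, $2 per million output.
cost = monthly_api_cost(
    requests_per_day=10_000,
    input_tokens=500,
    output_tokens=200,
    price_in_per_million=1.0,
    price_out_per_million=2.0,
)
print(f"Estimated monthly cost: ${cost:,.2f}")
```

With these placeholder numbers the estimate comes to about $270 a month, and it scales linearly: ten times the traffic means ten times the bill.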

Examples

- GPT-4 (OpenAI) – Powering ChatGPT, Microsoft Copilot.

- Claude 3 (Anthropic) – Known for safety and reasoning.

- Gemini (Google DeepMind) – Integrated with Google Workspace and Android ecosystem.

Choosing the Right LLM for Your Needs

Selecting the best LLM depends on your use case, budget, and technical expertise.

Here’s a simplified breakdown:

| Category | Best For | Pros | Cons | Examples |
|---|---|---|---|---|
| Local LLM | Privacy-first users, researchers, and small teams | Offline use, data privacy, no recurring costs | Needs high-end hardware and manual setup | Llama 3, Mistral 7B, Gemma 2B |
| Open-Source LLM | Developers, startups, and academics | Free, modifiable, community support | Quality varies, limited support | Falcon, Llama 3, Mistral |
| Proprietary LLM | Enterprises, commercial apps | Best accuracy, security compliance, managed updates | Expensive, less control | GPT-4, Claude 3, Gemini |
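If it helps to see the breakdown as a decision procedure, the sketch below encodes it as a few ordered rules. The order of the checks and the default are assumptions made for illustration: real decisions weigh several factors at once, so use this as a starting point for discussion, not a verdict.

```python
def suggest_llm_category(data_must_stay_on_premises=False,
                         wants_to_modify_model=False,
                         needs_vendor_support=False):
    """Rule-of-thumb mapping from priorities to one of the three categories."""
    if data_must_stay_on_premises:
        return "Local LLM"          # privacy outranks everything else here
    if wants_to_modify_model:
        return "Open-Source LLM"    # fine-tuning needs access to the weights
    if needs_vendor_support:
        return "Proprietary LLM"    # SLAs and managed updates
    return "Open-Source LLM"        # assumed default: cheap to experiment with


print(suggest_llm_category(data_must_stay_on_premises=True))
print(suggest_llm_category(needs_vendor_support=True))
```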

Use Cases for Each Type

Local LLMs

- Offline chat assistants

- Secure enterprise chatbots

- AI for healthcare or legal sectors where data can’t leave premises

Open-Source LLMs

- Academic research and experimentation

- Startup AI tools (content generation, summarization)

- Fine-tuning for niche languages or industries

Proprietary LLMs

- Customer support automation

- AI writing assistants

- Coding copilots and enterprise document summarization tools

Future of LLMs

The next wave of LLM development is moving toward hybrid models — combining the privacy of local deployment with the power of cloud-based inference.
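One simple form a hybrid setup could take is a router that keeps sensitive prompts on a local model and sends everything else to a cloud API. The sketch below shows only the routing decision. The patterns are illustrative assumptions and nowhere near a complete detector for personal data; a real deployment would need a proper classification or policy layer.

```python
import re

# Illustrative patterns only (assumptions), not a real PII detector.
SENSITIVE_PATTERNS = [
    re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),       # SSN-like number
    re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),    # email address
    re.compile(r"\b(patient|diagnosis|confidential)\b", re.IGNORECASE),
]


def choose_backend(prompt):
    """Return "local" for prompts that look sensitive, otherwise "cloud"."""
    if any(pattern.search(prompt) for pattern in SENSITIVE_PATTERNS):
        return "local"
    return "cloud"


print(choose_backend("Summarize this confidential contract"))
print(choose_backend("Write a haiku about spring"))
```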

Advancements like parameter-efficient fine-tuning (PEFT) and quantization are making LLMs smaller and more efficient, allowing them to run on everyday hardware.
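To see why parameter-efficient fine-tuning is cheap, take LoRA, one widely used PEFT method. Instead of updating a full weight matrix of size d_out × d_in, it trains two thin matrices of rank r, for r × (d_in + d_out) parameters in total. The sketch below compares the two counts; the 4096 × 4096 layer size and rank 8 are example values, not taken from any particular model.

```python
def full_finetune_params(d_in, d_out):
    """Trainable parameters when the whole weight matrix is updated."""
    return d_in * d_out


def lora_params(d_in, d_out, rank):
    """Trainable parameters for LoRA: A is (rank x d_in), B is (d_out x rank)."""
    return rank * (d_in + d_out)


d_in = d_out = 4096  # example layer size (assumption)
rank = 8             # example LoRA rank (assumption)

full = full_finetune_params(d_in, d_out)
lora = lora_params(d_in, d_out, rank)
print(f"Full fine-tuning: {full:,} parameters")
print(f"LoRA (rank {rank}): {lora:,} parameters ({lora / full:.2%} of full)")
```

For this example layer, LoRA trains 65,536 parameters instead of 16,777,216, well under one percent of the original count.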

We can expect:

- Seamless AI model interoperability between cloud and edge.

- Energy-efficient inference optimized for consumer devices.

- Responsible AI frameworks focusing on fairness, transparency, and safety.

- Agentic AI systems — autonomous LLMs that can perform multi-step reasoning and take actions.

FAQs

1. What does LLM stand for in AI?

LLM stands for Large Language Model, a type of AI trained to understand and generate human-like text.

2. Is it safe to use Local LLMs?

Generally, yes. Local LLMs are the most privacy-friendly option since your data stays on your device or server, provided you download models from trusted sources and keep the host machine secure.

3. Are open-source LLMs free to use commercially?

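Many are. Some, such as Mistral 7B, use permissive licenses like Apache 2.0, while others, such as Llama 3 and Gemma, ship under custom licenses with their own conditions. Always check the specific model license before commercial deployment.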

4. Can I fine-tune a proprietary LLM?

Not directly, because you don’t get access to the weights. However, some vendors offer hosted customization through their APIs (like OpenAI’s fine-tuning feature).

5. Which LLM is best for beginners?

For newcomers, Llama 3 8B (Meta) or Gemma 2B (Google) are great starting points due to their relatively small size and active communities.

Final Thoughts

The choice between Local, Open-Source, and Proprietary LLMs ultimately depends on your priorities.

If privacy and control are your top concerns — go local.

If you value freedom and experimentation — open-source is your best bet.

If you need reliability and enterprise-grade performance — proprietary models lead the way.

The AI landscape is evolving rapidly, and hybrid approaches that blend the strengths of all three will likely dominate the future.

No matter which path you choose, understanding these distinctions will help you make informed decisions and build AI systems that align with your goals.

Published by TechToGeek.com