SafeChatAI, a Local ChatGPT Clone: Is It Great or Not So Great? 5 Steps to Install

Introduction

As generative AI becomes the backbone of modern digital interaction, developers are looking for secure, customizable, and private chatbot solutions that can run locally. This inspired the creation of SafeChatAI — a Local ChatGPT Clone that combines the power of Streamlit, Python, and MongoDB to deliver an intuitive AI chat experience right from your desktop.

SafeChatAI is designed for developers, AI researchers, and tech enthusiasts who want to explore the capabilities of conversational AI without depending on external cloud services. By running entirely in your local environment, it keeps your data private, avoids network round trips to a remote API, and gives you control over which model runs, making it a promising GenAI product for personal and enterprise use.

What is SafeChatAI?

SafeChatAI is a Local ChatGPT Clone that lets you interact with AI models directly from your machine. The system is built using:

- Frontend: Streamlit (for creating the user interface)

- Backend: Python (for processing and model handling)

- Database: MongoDB (for chat history and user data storage)

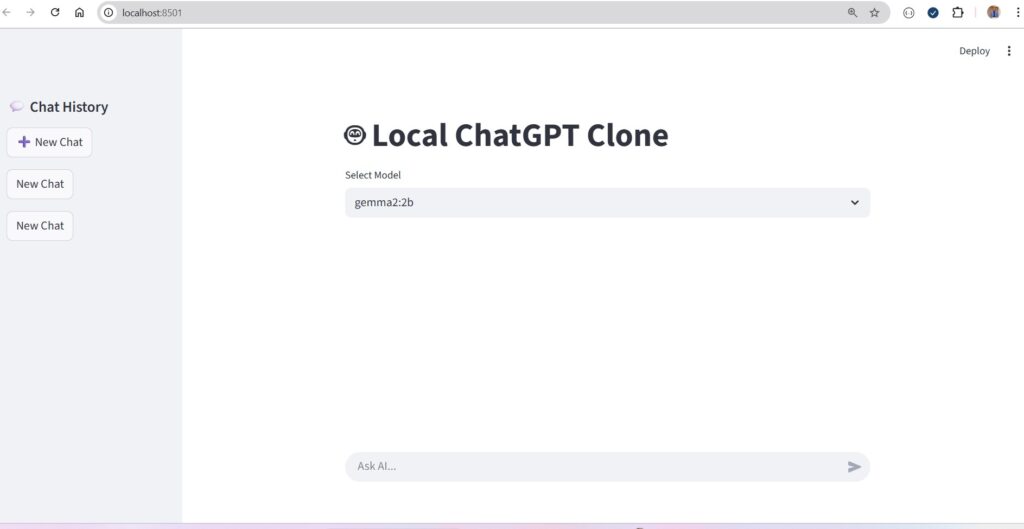

The screenshot above shows its minimalistic interface, featuring a chat history panel, new chat creation buttons, and an AI response area. The layout is similar to ChatGPT’s, but it runs locally at localhost:8501.

SafeChatAI is not just a UI demo — it’s a complete, production-ready GenAI product that showcases the integration of open-source AI models with real-time conversational ability.

Key Features of SafeChatAI

✅ Local AI Chat:

Interact with AI models without sending data to the cloud. When you use a local model, your prompts and responses never leave your machine.

✅ Streamlit Interface:

A responsive and user-friendly interface built using Python’s Streamlit framework.

✅ MongoDB Integration:

Securely store your chat history and sessions locally or in a private database.

✅ Model Flexibility:

Supports switching between local or API-based models (like gemma2:2b or custom LLMs).

✅ Open Source & Extensible:

Completely open-source and available on GitHub for developers to modify and expand.

👉 GitHub: https://github.com/subasen85/SafeChat-AI.git

✅ Lightweight & Fast:

No heavy dependencies — can run efficiently on a standard laptop.

Installation Guide

Installing SafeChatAI is straightforward. With Python already installed, the setup takes five steps.

Step 1: Clone the Repository

git clone https://github.com/subasen85/SafeChat-AI.git

cd SafeChat-AI

Step 2: Create a Virtual Environment

python -m venv venv

source venv/bin/activate # for Mac/Linux

venv\Scripts\activate # for Windows

Step 3: Install Required Dependencies

pip install -r requirements.txt

Step 4: Configure MongoDB

- Install and start MongoDB locally or use MongoDB Atlas.

- Update your MongoDB connection string in the Python backend file (usually app.py or config.py).

Example connection:

import pymongo

client = pymongo.MongoClient("mongodb://localhost:27017/")
db = client["safechatai"]
collection = db["chat_history"]
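Hard-coding the connection string is fine for a first run, but reading it from the environment makes it easier to switch between a local server and MongoDB Atlas. The following is a minimal sketch, not the project's actual configuration code: the environment variable names `SAFECHAT_MONGO_URI` and `SAFECHAT_DB_NAME` and both helper functions are hypothetical, while the default URI, database name, and collection name are taken from the example above.

```python
import os

# Hypothetical environment variable names; the project itself may use different ones.
DEFAULT_URI = "mongodb://localhost:27017/"
DEFAULT_DB = "safechatai"


def get_mongo_settings(env=None):
    """Return the MongoDB URI and database name, falling back to local defaults."""
    env = os.environ if env is None else env
    return {
        "uri": env.get("SAFECHAT_MONGO_URI", DEFAULT_URI),
        "db_name": env.get("SAFECHAT_DB_NAME", DEFAULT_DB),
    }


def connect(settings, timeout_ms=2000):
    """Open a client and fail fast if the server is unreachable."""
    import pymongo  # imported lazily so the settings helper works without the driver

    client = pymongo.MongoClient(settings["uri"], serverSelectionTimeoutMS=timeout_ms)
    client.admin.command("ping")  # raises if no server answers within the timeout
    return client[settings["db_name"]]["chat_history"]
```

Calling `connect(get_mongo_settings())` at startup surfaces a stopped MongoDB service right away instead of on the first saved message.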

Step 5: Run SafeChatAI

streamlit run app.py

Once launched, open your browser and go to:

http://localhost:8501

🎉 You’ll now see the SafeChatAI interface ready for interaction!

How It Works

SafeChatAI integrates frontend, backend, and database layers seamlessly. Let’s look at each component.

1. Streamlit Frontend

The Streamlit interface serves as the main user interaction layer. It handles:

- Displaying previous chat sessions

- Taking user input

- Displaying AI responses in real-time

Example snippet:

import streamlit as st

st.title("🧠 SafeChatAI - Local ChatGPT Clone")

user_input = st.text_input("Ask AI...")

# get_ai_response is defined in the Python backend (next section)
if st.button("Send"):
    response = get_ai_response(user_input)
    st.write(response)
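The snippet above handles a single question and answer. Streamlit reruns the whole script on every interaction, so a multi-turn chat needs to keep its messages in `st.session_state`. Here is a rough sketch of that pattern, assuming a recent Streamlit release that provides `st.chat_input` and `st.chat_message`. The `add_turn` and `main` helpers are hypothetical names for illustration, and the project's real UI code may be organized differently.

```python
def add_turn(history, user_input, reply):
    """Append one user/assistant exchange to a list of chat messages."""
    history.append({"role": "user", "content": user_input})
    history.append({"role": "assistant", "content": reply})
    return history


def main(get_ai_response):
    """Render the chat page; get_ai_response is any function mapping a prompt to a reply."""
    import streamlit as st  # lazy import: only needed when the app actually runs

    st.title("SafeChatAI - Local ChatGPT Clone")

    # session_state survives reruns, so the conversation is not lost on each interaction
    if "history" not in st.session_state:
        st.session_state.history = []

    # Replay the earlier messages at the top of the page
    for message in st.session_state.history:
        with st.chat_message(message["role"]):
            st.write(message["content"])

    user_input = st.chat_input("Ask AI...")
    if user_input:
        with st.chat_message("user"):
            st.write(user_input)
        reply = get_ai_response(user_input)
        with st.chat_message("assistant"):
            st.write(reply)
        add_turn(st.session_state.history, user_input, reply)
```

To try it, call `main(get_ai_response)` at the bottom of the script you pass to `streamlit run`.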

2. Python Backend

The backend is responsible for managing the chat logic, connecting to the model, and saving conversations.

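from transformers import pipeline

# Load the model once at import time; rebuilding the pipeline on every call is slow
chatbot = pipeline("text-generation", model="google/gemma-2b")

def get_ai_response(user_input):
    # max_new_tokens caps the reply length; return_full_text=False omits the echoed prompt
    response = chatbot(user_input, max_new_tokens=200, do_sample=True, return_full_text=False)
    return response[0]["generated_text"]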

This function sends the user input to a text-generation model (google/gemma-2b in this example) and returns the AI-generated reply.
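If you want to switch models from the UI, one option is to map each model name to a function that produces a reply. The sketch below is an assumption about how that could look, not the project's confirmed design: it talks to a locally running Ollama server over its HTTP API for the `gemma2:2b` tag and adds an `echo` backend for testing without any model. The names `BACKENDS`, `ollama_backend`, `echo_backend`, and `build_ollama_payload` are hypothetical, and this version of `get_ai_response` takes an extra `model_name` argument.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint


def build_ollama_payload(model, prompt):
    """Build the JSON body for a single, non-streaming generation request."""
    return {"model": model, "prompt": prompt, "stream": False}


def ollama_backend(model):
    """Return a function that sends prompts to a locally running Ollama server."""
    def generate(prompt):
        body = json.dumps(build_ollama_payload(model, prompt)).encode("utf-8")
        request = urllib.request.Request(
            OLLAMA_URL, data=body, headers={"Content-Type": "application/json"}
        )
        with urllib.request.urlopen(request, timeout=120) as reply:
            return json.loads(reply.read())["response"]
    return generate


def echo_backend(prompt):
    """Stand-in backend for testing the UI without loading any model."""
    return f"(echo) {prompt}"


# Maps the name shown in the UI to the function that produces a reply.
BACKENDS = {
    "echo": echo_backend,
    "gemma2:2b": ollama_backend("gemma2:2b"),
}


def get_ai_response(user_input, model_name="echo"):
    """Dispatch the prompt to whichever backend the user selected."""
    try:
        backend = BACKENDS[model_name]
    except KeyError:
        raise ValueError(f"Unknown model: {model_name!r}") from None
    return backend(user_input)
```

Adding another model then means adding one entry to `BACKENDS`, and the frontend only needs the list of keys to fill its dropdown.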

3. MongoDB Database

MongoDB stores the chat history and allows users to retrieve previous sessions.

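from datetime import datetime

# One document per exchange; user_input and response come from the chat handler
chat_record = {
    "user_input": user_input,
    "ai_response": response,
    "timestamp": datetime.now()
}

collection.insert_one(chat_record)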

This ensures conversations are persistent across sessions, giving a consistent chat experience.
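Saving is only half of persistence: to show a previous session, the app also has to read its records back in order. The sketch below shows one possible way to do that. The `session_id` field and both function names are hypothetical additions (the record shown above has no session field), and `collection` is assumed to be a PyMongo collection like the one created during installation.

```python
from datetime import datetime, timezone


def build_chat_record(session_id, user_input, ai_response, now=None):
    """Create one chat document; session_id is a hypothetical field for grouping turns."""
    return {
        "session_id": session_id,
        "user_input": user_input,
        "ai_response": ai_response,
        # timezone-aware UTC timestamps avoid ambiguity between machines
        "timestamp": now or datetime.now(timezone.utc),
    }


def load_session(collection, session_id):
    """Return all turns of one session, oldest first."""
    cursor = collection.find({"session_id": session_id}).sort("timestamp", 1)
    return list(cursor)
```

A typical flow would call `collection.insert_one(build_chat_record(...))` after each reply and `load_session(collection, session_id)` when the user reopens a chat from the history panel.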

User Interface Overview

The screenshot demonstrates SafeChatAI’s Streamlit-based interface featuring:

- A chat history sidebar for managing multiple sessions.

- A main chat window for user and AI conversations.

- A dropdown menu to select AI models such as gemma2:2b.

This clean design closely mimics modern AI chat platforms, with an emphasis on simplicity and local functionality.
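For readers curious how such a layout might be wired up, here is a hedged sketch of the sidebar using Streamlit's `st.sidebar`, `st.selectbox`, and `st.button`. It is an illustration rather than the project's actual code: `session_label`, `render_sidebar`, and the shape of the `sessions` dictionary (session id mapped to the first message) are assumptions.

```python
def session_label(first_message, max_chars=30):
    """Shorten a session's first message into a one-line sidebar label."""
    text = " ".join(first_message.split())  # collapse whitespace and newlines
    return text if len(text) <= max_chars else text[: max_chars - 1] + "…"


def render_sidebar(sessions, model_names):
    """Draw the sidebar and return (selected_model, selected_session_id or None)."""
    import streamlit as st  # lazy import: only needed when the app actually runs

    selected_session = None
    with st.sidebar:
        # Dropdown for choosing the model, e.g. ["gemma2:2b"]
        model = st.selectbox("Model", model_names)
        if st.button("New chat"):
            selected_session = "new"
        # One button per stored session; unique keys keep Streamlit widgets distinct
        for session_id, first_message in sessions.items():
            if st.button(session_label(first_message), key=f"session-{session_id}"):
                selected_session = session_id
    return model, selected_session
```

The main chat window can then load whichever session id comes back and pass the selected model name to the backend.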

Benefits of SafeChatAI

- Privacy: No cloud processing — all data stays on your local machine.

- Open-Source Flexibility: Fully customizable for developers and AI enthusiasts.

- Low-Cost AI Development: Avoid API costs by running models locally.

- Educational Value: Ideal for students learning about NLP, Streamlit, and GenAI.

- Offline AI Experience: Continue chatting with your AI even without an internet connection.

Potential Use Cases

- AI Learning Projects: Experiment with conversational AI architecture.

- Enterprise Chatbots: Deploy internal AI chat systems for company use.

- Data Privacy Research: Study local AI deployments for compliance and governance.

- Developers & Educators: Use it as a teaching tool for LLM integration.

- Prototype Building: Extend the framework for domain-specific bots (e.g., Finance AI, Education AI).

Upcoming Improvements

The developer plans to enhance SafeChatAI with:

- Model switching between local and API-based models (OpenAI, Ollama, etc.)

- Enhanced MongoDB analytics for chat insights.

- User authentication and admin dashboard.

- Integration with speech-to-text and text-to-speech APIs.

- GPU acceleration for faster local model inference.

FAQs

1. What is SafeChatAI?

SafeChatAI is a local AI chatbot similar to ChatGPT, built using Streamlit, Python, and MongoDB for private, offline use.

2. Is SafeChatAI open-source?

Yes, it’s fully open-source and available on GitHub at https://github.com/subasen85/SafeChat-AI.git.

3. Do I need an internet connection to run it?

No. Once the dependencies and model weights are downloaded and MongoDB is running locally, you can run SafeChatAI entirely offline.

4. Can I connect it to different models?

Yes, you can modify the backend to load various models, such as models hosted on Hugging Face, LLaMA-family models, or custom fine-tuned models.

5. Is it suitable for beginners?

Absolutely. SafeChatAI’s clean code structure and Streamlit UI make it beginner-friendly for those learning AI development.

Final Thoughts

SafeChatAI is more than a side project — it’s a glimpse into the future of private and local generative AI. By combining Streamlit’s simplicity, Python’s flexibility, and MongoDB’s scalability, this project demonstrates how powerful conversational models can run securely within your environment.

Whether you’re a developer exploring GenAI, a student learning about AI pipelines, or a researcher testing local inference, SafeChatAI provides the perfect foundation for experimentation and learning.

Published by TechToGeek.com — your destination for AI, coding, and technology innovation.